We share the latest Microsoft DP-300 test questions and answers for free, and you can practice them online.

You can also download the latest DP-300 exam PDF. The free questions are only part of what we share; the complete set of Microsoft DP-300 exam questions and answers is available from leads4pass.

The leads4pass DP-300 exam dumps contain VCE dumps and PDF dumps.

Microsoft DP-300 Exam “Administering Relational Databases on Microsoft Azure” https://www.leads4pass.com/dp-300.html (Total Questions: 142 Q&A)

Free Microsoft DP-300 exam PDF from Fulldumps, provided by leads4pass

https://www.fulldumps.com/wp-content/uploads/2021/05/leads4pass-Microsoft-Data-DP-300-Exam-Dumps-Braindumps-PDF-VCE.pdf

Microsoft DP-300 exam questions online practice test

QUESTION 1

You have two Azure SQL Database servers named Server1 and Server2. Each server contains an Azure SQL database named Database1.

You need to restore Database1 from Server1 to Server2. The solution must replace the existing Database1 on Server2.

Solution: You run the Remove-AzSqlDatabase PowerShell cmdlet for Database1 on Server2. You run the Restore-AzSqlDatabase PowerShell cmdlet for Database1 on Server2.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Instead, restore Database1 from Server1 to Server2 by using the RESTORE Transact-SQL command and the REPLACE option.

Note: REPLACE should be used rarely and only after careful consideration. Restore normally prevents accidentally overwriting a database with a different database. If the database specified in a RESTORE statement already exists on the current server and the specified database family GUID differs from the database family GUID recorded in the backup set, the database is not restored. This is an important safeguard.

Reference: https://docs.microsoft.com/en-us/sql/t-sql/statements/restore-statements-transact-sql

QUESTION 2

You have an Azure SQL database named Sales.

You need to implement disaster recovery for Sales to meet the following requirements:

During normal operations, provide at least two readable copies of Sales.

Ensure that Sales remains available if a datacenter fails.

Solution: You deploy an Azure SQL database that uses the General Purpose service tier and failover groups.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Instead deploy an Azure SQL database that uses the Business Critical service tier and Availability Zones.

Note: Premium and Business Critical service tiers leverage the Premium availability model, which integrates compute resources (sqlservr.exe process) and storage (locally attached SSD) on a single node. High availability is achieved by replicating both compute and storage to additional nodes, creating a three to four-node cluster.

By default, the cluster of nodes for the premium availability model is created in the same datacenter. With the introduction of Azure Availability Zones, SQL Database can place different replicas of the Business Critical database in different availability zones in the same region. To eliminate a single point of failure, the control ring is also duplicated across multiple zones as three gateway rings (GW).

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/high-availability-sla

QUESTION 3

You have an Always On availability group deployed to Azure virtual machines. The availability group contains a database named DB1 and has two nodes named SQL1 and SQL2. SQL1 is the primary replica.

You need to initiate a full backup of DB1 on SQL2.

Which statement should you run?

A. BACKUP DATABASE DB1 TO URL='https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak' with (Differential, STATS=5, COMPRESSION);

B. BACKUP DATABASE DB1 TO URL='https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak' with (COPY_ONLY, STATS=5, COMPRESSION);

C. BACKUP DATABASE DB1 TO URL='https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak' with (File_Snapshot, STATS=5, COMPRESSION);

D. BACKUP DATABASE DB1 TO URL='https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak' with (NoInit, STATS=5, COMPRESSION);

Correct Answer: B

BACKUP DATABASE supports only copy-only full backups of databases, files, or filegroups when it's executed on secondary replicas. Copy-only backups don't impact the log chain or clear the differential bitmap.

Incorrect Answers:

A: Differential backups are not supported on secondary replicas. Attempting one raises an error because secondary replicas support only copy-only full database backups.

Reference: https://docs.microsoft.com/en-us/sql/database-engine/availability-groups/windows/active-secondaries-backup-on-secondary-replicas-always-on-availability-groups

QUESTION 4

You receive numerous alerts from Azure Monitor for an Azure SQL database.

You need to reduce the number of alerts. You must only receive alerts if there is a significant change in usage patterns for an extended period.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Set Threshold Sensitivity to High

B. Set the Alert logic threshold to Dynamic

C. Set the Alert logic threshold to Static

D. Set Threshold Sensitivity to Low

E. Set Force Plan to On

Correct Answer: BD

B: Dynamic Thresholds continuously learns the data of the metric series and tries to model it using a set of algorithms and methods. It detects patterns in the data such as seasonality (hourly, daily, weekly), and can handle noisy metrics (such as machine CPU or memory) as well as metrics with low dispersion (such as availability and error rate).

D: Alert threshold sensitivity is a high-level concept that controls the amount of deviation from metric behavior required to trigger an alert.

Low: the thresholds are loose, with more distance from the metric series pattern, so an alert rule triggers only on large deviations and produces fewer alerts.
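To make the effect of sensitivity concrete, here is a toy sketch in Python. It is not Azure Monitor's algorithm: it assumes a simple band of the historical mean plus or minus a multiple of the standard deviation, and the multipliers in `BAND_WIDTH` are hypothetical values chosen only for illustration.

```python
from statistics import mean, stdev

# Hypothetical multipliers: lower sensitivity means a wider band and fewer alerts.
BAND_WIDTH = {"High": 1.0, "Medium": 2.0, "Low": 3.0}


def count_alerts(history, recent, sensitivity):
    """Count recent samples that fall outside the band learned from history."""
    center = mean(history)
    width = BAND_WIDTH[sensitivity] * stdev(history)
    return sum(1 for value in recent if abs(value - center) > width)


if __name__ == "__main__":
    history = [40, 42, 38, 41, 39, 43, 37, 40]  # made-up CPU percentages
    recent = [41, 43, 45, 47, 60]
    for level in ("High", "Medium", "Low"):
        print(level, count_alerts(history, recent, level))
```

In this sketch the Low setting flags the fewest samples, which matches the goal of alerting only on significant changes.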

QUESTION 5

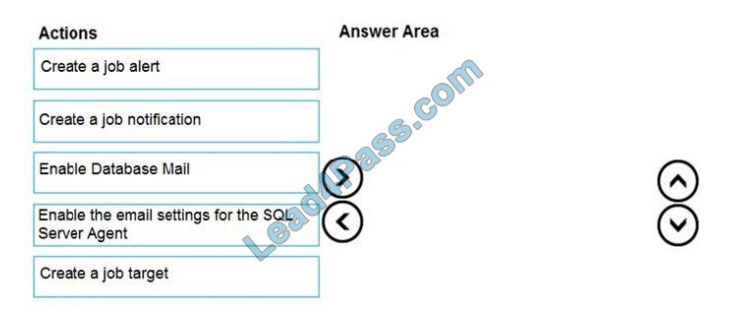

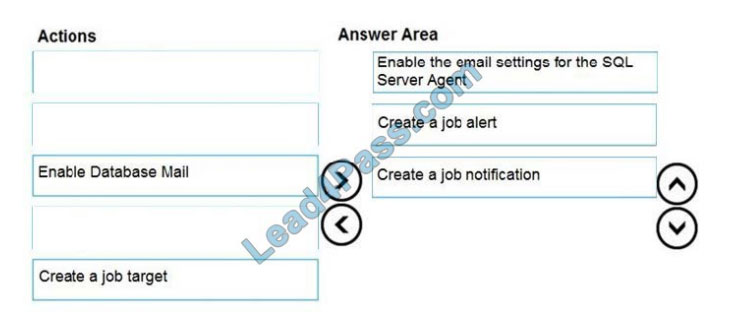

DRAG DROP

You have SQL Server on an Azure virtual machine named SQL1.

SQL1 has an agent job to back up all databases.

You add a user named dbadmin1 as a SQL Server Agent operator.

You need to ensure that dbadmin1 receives an email alert if a job fails.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Step 1: Enable the email settings for the SQL Server Agent.

To send a notification in response to an alert, you must first configure SQL Server Agent to send mail.

Step 2: Create a job alert

Step 3: Create a job notification

Example:

-- adds an e-mail notification for the specified alert (Test Alert)

-- This example assumes that Test Alert already exists

-- and that François Ajenstat is a valid operator name.

USE msdb ;

GO

EXEC dbo.sp_add_notification

@alert_name = N'Test Alert',

@operator_name = N'François Ajenstat',

@notification_method = 1 ;

GO

Reference:

https://docs.microsoft.com/en-us/sql/ssms/agent/notify-an-operator-of-job-status

https://docs.microsoft.com/en-us/sql/ssms/agent/assign-alerts-to-an-operator

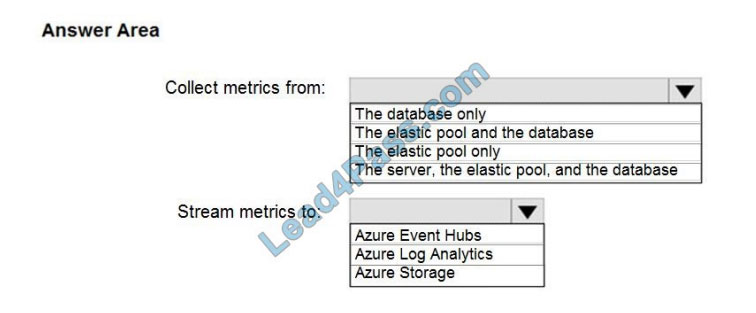

QUESTION 6

HOTSPOT

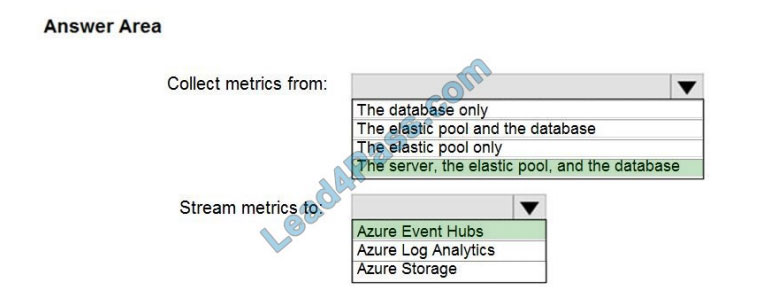

You need to implement the monitoring of SalesSQLDb1. The solution must meet the technical requirements.

How should you collect and stream metrics? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: The server, the elastic pool, and the database

Scenario: SalesSQLDb1 is in an elastic pool named SalesSQLDb1Pool.

Litware technical requirements include: all SQL Server and Azure SQL Database metrics related to CPU and storage usage and limits must be analyzed by using Azure built-in functionality.

Box 2: Azure Event Hubs

Scenario: Migrate ManufacturingSQLDb1 to the Azure virtual machine platform.

Event hubs are able to handle custom metrics.

Incorrect Answers:

Azure Log Analytics

Azure metric and log data are sent to Azure Monitor Logs, previously known as Azure Log Analytics, directly by Azure.

Azure SQL Analytics is a cloud-only monitoring solution supporting streaming of diagnostics telemetry for all of your Azure SQL databases.

However, because Azure SQL Analytics does not use agents to connect to Azure Monitor, it does not support monitoring of SQL Server hosted on-premises or in virtual machines.

QUESTION 7

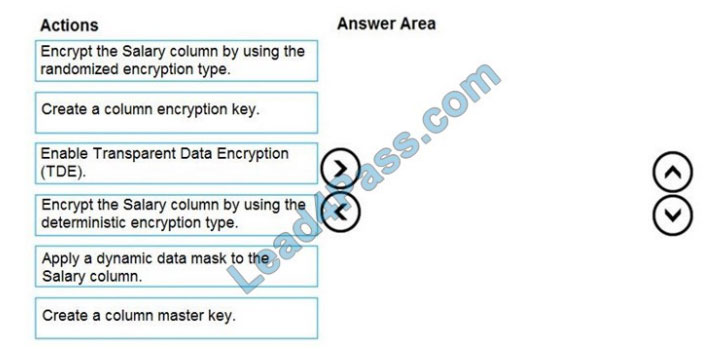

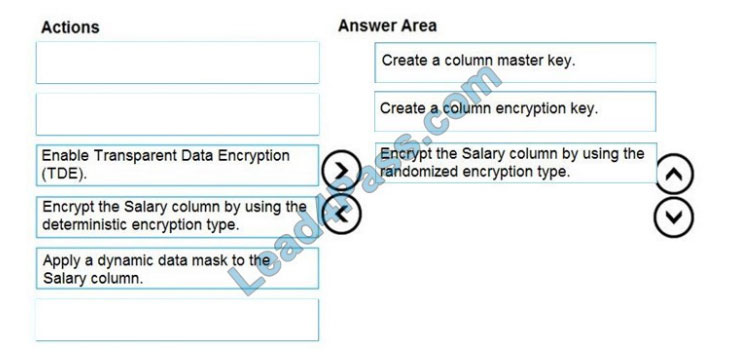

DRAG DROP

You have an Azure SQL database that contains a table named Employees. Employees contains a column named Salary.

You need to encrypt the Salary column. The solution must prevent database administrators from reading the data in the Salary column and must provide the most secure encryption.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Step 1: Create a column master key

Create a column master key metadata entry before you create a column encryption key metadata entry in the database and before any column in the database can be encrypted using Always Encrypted.

Step 2: Create a column encryption key.

Step 3: Encrypt the Salary column by using the randomized encryption type.

Randomized encryption uses a method that encrypts data in a less predictable manner. Randomized encryption is more secure, but prevents searching, grouping, indexing, and joining on encrypted columns.

Note: A column encryption key metadata object contains one or two encrypted values of a column encryption key that is used to encrypt data in a column. Each value is encrypted using a column master key.

Incorrect Answers:

Deterministic encryption.

Deterministic encryption always generates the same encrypted value for any given plain text value. Using deterministic encryption allows point lookups, equality joins, grouping and indexing on encrypted columns. However, it may also allow unauthorized users to guess information about encrypted values by examining patterns in the encrypted column, especially if there's a small set of possible encrypted values, such as True/False, or North/South/East/West region.

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/security/encryption/always-encrypted-database-engine
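The difference between the two encryption types can be illustrated with a toy Python sketch. This is not Always Encrypted and is not secure: it assumes a made-up stream cipher built from SHA-256, and the function names are hypothetical. It only shows why a deterministic scheme reveals equal values while a randomized one does not.

```python
import hashlib
import hmac
import os


def _keystream(key: bytes, iv: bytes, length: int) -> bytes:
    """Toy keystream from SHA-256 in counter mode. Illustration only."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + iv + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def encrypt(key: bytes, plaintext: bytes, deterministic: bool) -> bytes:
    if deterministic:
        # IV derived from the plaintext: equal values give equal ciphertexts.
        iv = hmac.new(key, plaintext, hashlib.sha256).digest()[:16]
    else:
        # Fresh random IV: equal values give different ciphertexts each time.
        iv = os.urandom(16)
    stream = _keystream(key, iv, len(plaintext))
    return iv + bytes(p ^ s for p, s in zip(plaintext, stream))


def decrypt(key: bytes, ciphertext: bytes) -> bytes:
    iv, body = ciphertext[:16], ciphertext[16:]
    stream = _keystream(key, iv, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


if __name__ == "__main__":
    key = os.urandom(32)
    salary = b"50000"
    print(encrypt(key, salary, True) == encrypt(key, salary, True))
    print(encrypt(key, salary, False) == encrypt(key, salary, False))
```

With the deterministic mode, two employees with the same salary produce identical stored values, which is what makes equality lookups possible and also what leaks patterns.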

QUESTION 8

You have a Microsoft SQL Server 2019 instance in an on-premises datacenter. The instance contains a 4-TB database

named DB1.

You plan to migrate DB1 to an Azure SQL Database managed instance.

What should you use to minimize downtime and data loss during the migration?

A. distributed availability groups

B. database mirroring

C. log shipping

D. Database Migration Assistant

Correct Answer: A

The Data Migration Assistant (DMA) helps you upgrade to a modern data platform by detecting compatibility issues that can impact database functionality in your new version of SQL Server or Azure SQL Database. DMA recommends performance and reliability improvements for your target environment and allows you to move your schema, data, and uncontained objects from your source server to your target server.

Note: SQL Managed Instance supports the following database migration options (currently these are the only supported migration methods):

Azure Database Migration Service – migration with near-zero downtime.

Native RESTORE DATABASE FROM URL – uses native backups from SQL Server and requires some downtime.

Reference:

https://docs.microsoft.com/en-us/sql/dma/dma-overview

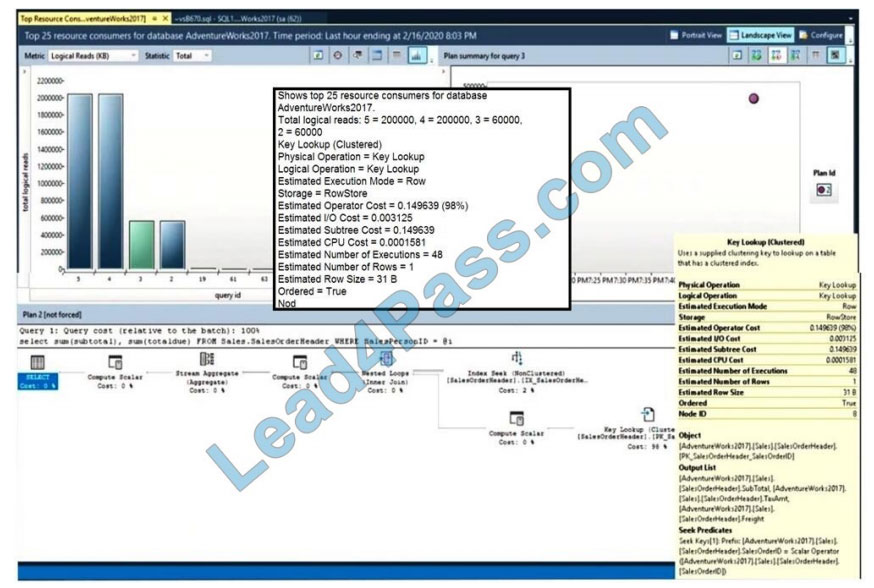

QUESTION 9

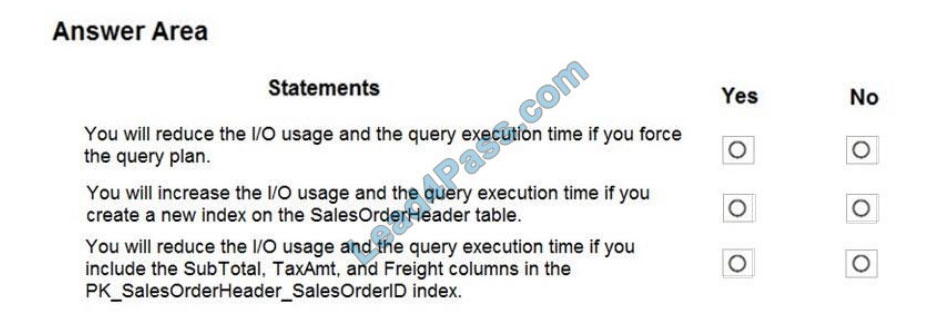

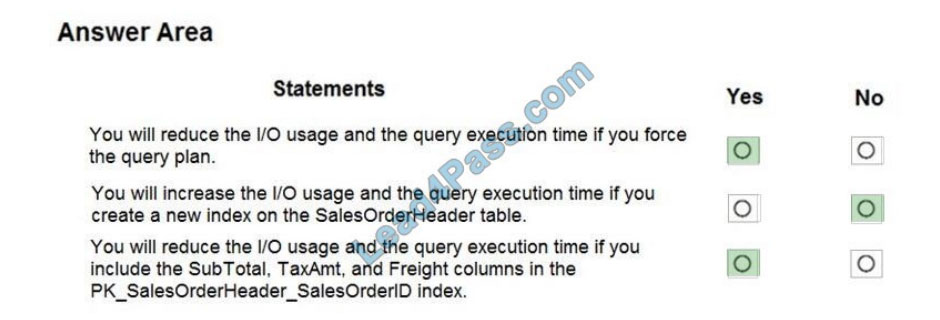

HOTSPOT

You have SQL Server on an Azure virtual machine.

You review the query plan shown in the following exhibit.

For each of the following statements, select yes if the statement is true. Otherwise, select no.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

QUESTION 10

You have SQL Server 2019 on an Azure virtual machine that runs Windows Server 2019. The virtual machine has 4 vCPUs and 28 GB of memory.

You scale up the virtual machine to 16 vCPUs and 64 GB of memory.

You need to provide the lowest latency for tempdb.

What is the total number of data files that tempdb should contain?

A. 2

B. 4

C. 8

D. 64

Correct Answer: C

The number of files depends on the number of (logical) processors on the machine. As a general rule, if the number of logical processors is less than or equal to eight, use the same number of data files as logical processors. If the number of logical processors is greater than eight, use eight data files and then, if contention continues, increase the number of data files by multiples of 4 until the contention is reduced to acceptable levels or make changes to the workload/code. With 16 vCPUs, this rule gives eight data files as the starting point.

Reference: https://docs.microsoft.com/en-us/sql/relational-databases/databases/tempdb-database
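The general rule quoted above can be written as a short Python sketch. The function names are hypothetical, and the sketch covers only the starting count and the "add four" step, not how contention is measured.

```python
def initial_tempdb_data_files(logical_processors: int) -> int:
    """Starting number of tempdb data files under the general rule."""
    if logical_processors < 1:
        raise ValueError("logical_processors must be at least 1")
    # Up to eight logical processors: one data file per processor.
    # More than eight: start with eight data files.
    return min(logical_processors, 8)


def next_tempdb_data_files(current_files: int) -> int:
    """If contention continues, grow the file count by four."""
    return current_files + 4


if __name__ == "__main__":
    for cpus in (4, 16):
        print(cpus, "vCPUs:", initial_tempdb_data_files(cpus), "data files")
```

For the virtual machine in this question, the sketch returns 4 before the scale-up and 8 after it.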

QUESTION 11

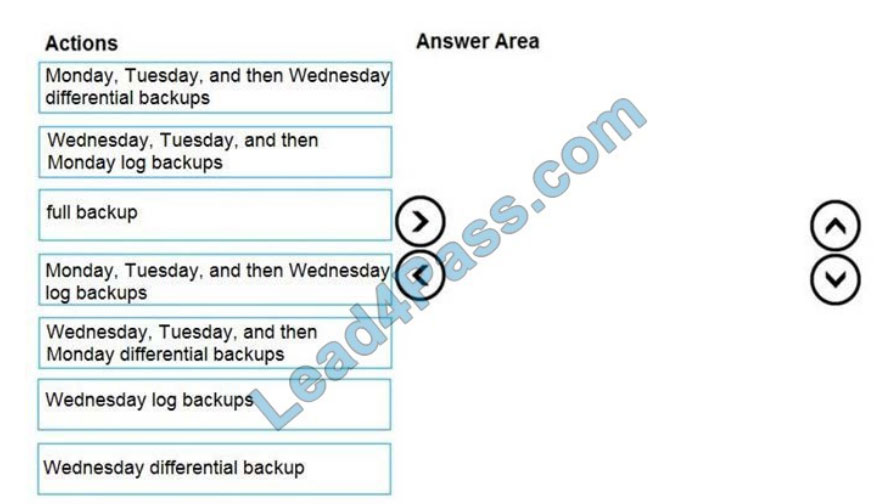

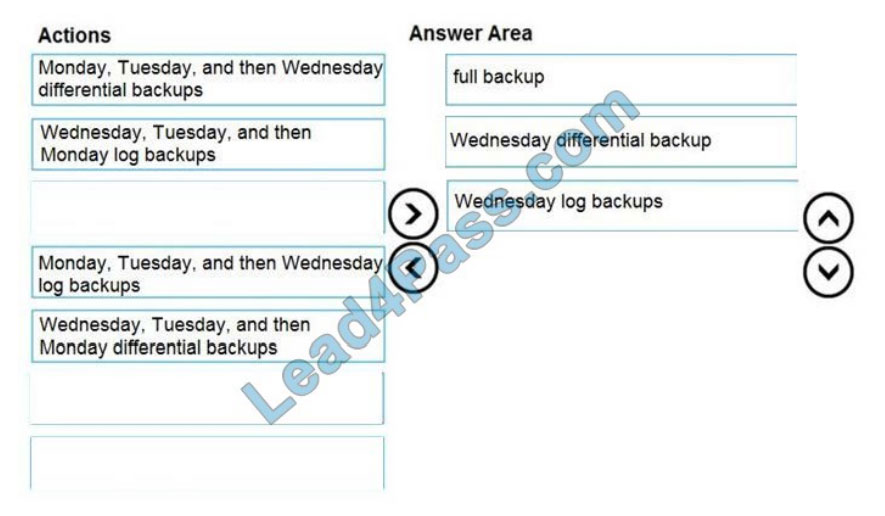

DRAG DROP

You have SQL Server on an Azure virtual machine that contains a database named DB1. DB1 is 30 TB and has a 1-GB daily rate of change.

You back up the database by using a Microsoft SQL Server Agent job that runs Transact-SQL commands. You perform a weekly full backup on Sunday, daily differential backups at 01:00, and transaction log backups every five minutes.

The database fails on Wednesday at 10:00.

Which three backups should you restore in sequence? To answer, move the appropriate backups from the list of backups to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:
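The answer exhibit is not reproduced in the text. As a hedged sketch of the general restore order (most recent full backup, then the most recent differential, then the log backups taken after it), the Python below builds the schedule from the question. The calendar dates and the midnight time of the Sunday full backup are assumptions, since the question gives only weekdays, and a tail-log backup is not modeled.

```python
from datetime import datetime, timedelta


def restore_chain(full_backups, differential_backups, log_backups, failure):
    """Return (full, differential, logs) in the order they would be restored."""
    # 1. Most recent full backup taken before the failure.
    full = max(b for b in full_backups if b <= failure)
    # 2. Most recent differential taken after that full backup, if any.
    diffs = [b for b in differential_backups if full < b <= failure]
    diff = max(diffs) if diffs else None
    # 3. Every log backup taken after the last restored data backup.
    base = diff if diff is not None else full
    logs = sorted(b for b in log_backups if base < b <= failure)
    return full, diff, logs


# Hypothetical calendar: 2021-05-02 is a Sunday, 2021-05-05 is a Wednesday.
full_backups = [datetime(2021, 5, 2, 0, 0)]  # assumed time of the full backup
differential_backups = [datetime(2021, 5, day, 1, 0) for day in (3, 4, 5)]
failure = datetime(2021, 5, 5, 10, 0)

log_backups = []
t = full_backups[0] + timedelta(minutes=5)
while t <= failure:
    log_backups.append(t)
    t += timedelta(minutes=5)

full, diff, logs = restore_chain(
    full_backups, differential_backups, log_backups, failure
)
print("Full:", full)
print("Differential:", diff)
print("Log backups:", logs[0], "through", logs[-1])
```

Under these assumptions the chain is the Sunday full backup, the Wednesday 01:00 differential, and the log backups from Wednesday 01:05 up to the failure.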

QUESTION 12

You are building a database backup solution for a SQL Server database hosted on an Azure virtual machine.

In the event of an Azure regional outage, you need to be able to restore the database backups. The solution must minimize costs.

Which type of storage accounts should you use for the backups?

A. locally-redundant storage (LRS)

B. read-access geo-redundant storage (RA-GRS)

C. zone-redundant storage (ZRS)

D. geo-redundant storage

Correct Answer: B

Geo-redundant storage (with GRS or GZRS) replicates your data to another physical location in the secondary region to protect against regional outages. However, that data is available to be read only if the customer or Microsoft initiates a failover from the primary to secondary region. When you enable read access to the secondary region, your data is available to be read if the primary region becomes unavailable. For read access to the secondary region, enable read-access geo-redundant storage (RA-GRS) or read-access geo-zone-redundant storage (RA-GZRS).

Incorrect Answers:

A: Locally redundant storage (LRS) copies your data synchronously three times within a single physical location in the primary region. LRS is the least expensive replication option, but is not recommended for applications requiring high availability.

C: Zone-redundant storage (ZRS) copies your data synchronously across three Azure availability zones in the primary region.

D: Geo-redundant storage (with GRS or GZRS) replicates your data to another physical location in the secondary region to protect against regional outages. However, that data is available to be read only if the customer or Microsoft initiates a failover from the primary to secondary region.

Reference: https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy
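The trade-off described above can be sketched as a small Python lookup. The property table is an assumption made for illustration: `cost_rank` is only a relative ordering (1 is cheapest) and not a price, and the function name is hypothetical.

```python
# Assumed properties of the four options offered in the question.
OPTIONS = {
    "LRS": {"survives_region_outage": False, "read_without_failover": False, "cost_rank": 1},
    "ZRS": {"survives_region_outage": False, "read_without_failover": False, "cost_rank": 2},
    "GRS": {"survives_region_outage": True, "read_without_failover": False, "cost_rank": 3},
    "RA-GRS": {"survives_region_outage": True, "read_without_failover": True, "cost_rank": 4},
}


def cheapest_option(require_read_without_failover: bool) -> str:
    """Cheapest option whose data outlives a regional outage."""
    candidates = [
        name
        for name, props in OPTIONS.items()
        if props["survives_region_outage"]
        and (props["read_without_failover"] or not require_read_without_failover)
    ]
    return min(candidates, key=lambda name: OPTIONS[name]["cost_rank"])


if __name__ == "__main__":
    print(cheapest_option(require_read_without_failover=True))
    print(cheapest_option(require_read_without_failover=False))
```

The stated answer corresponds to the case where the backups must be readable without waiting for a failover; if a failover is acceptable, the sketch selects plain GRS instead.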

QUESTION 13

You plan to move two 100-GB databases to Azure.

You need to dynamically scale resource consumption based on workloads. The solution must minimize downtime during scaling operations.

What should you use?

A. An Azure SQL Database elastic pool

B. SQL Server on Azure virtual machines

C. an Azure SQL Database managed instance

D. Azure SQL databases

Correct Answer: A

Azure SQL Database elastic pools are a simple, cost-effective solution for managing and scaling multiple databases that have varying and unpredictable usage demands. The databases in an elastic pool are on a single server and share a set number of resources at a set price.

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/elastic-pool-overview

Thank you for reading! These practice questions are a starting point for preparing for the Microsoft DP-300 exam.

For the complete DP-300 exam dumps, visit https://www.leads4pass.com/dp-300.html. Good luck with the exam!
