This article explains how to prepare for and pass the Microsoft DP-300 exam. Links with more exam details are provided below.

Based on years of exam experience, there are two ways to pass the DP-300 exam:

First, study and practice over a long period to build your own skills.

Second, use the Microsoft Azure Database Administrator Associate exam dumps. Both methods are covered on this site. Read on!

Free Microsoft DP-300 exam practice questions

The answers are at the end of the article.

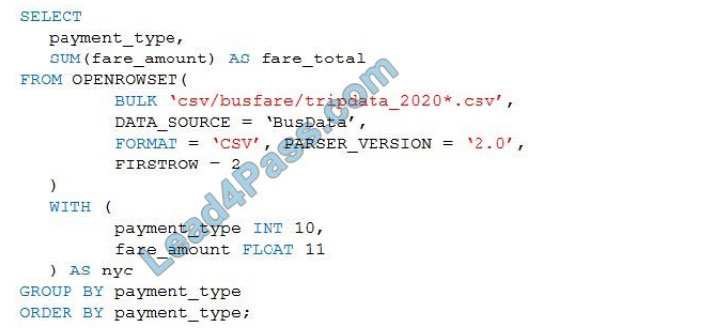

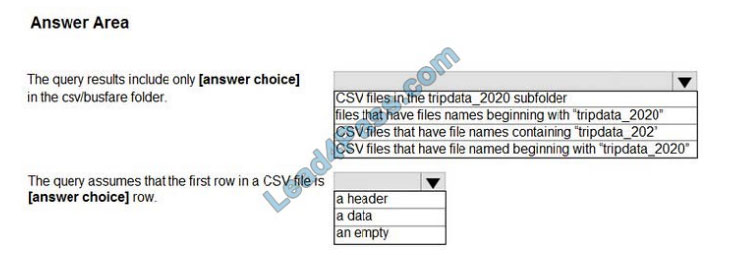

QUESTION 1

HOTSPOT

You are performing exploratory analysis of bus fare data in an Azure Data Lake Storage Gen2 account by using an Azure Synapse Analytics serverless SQL pool.

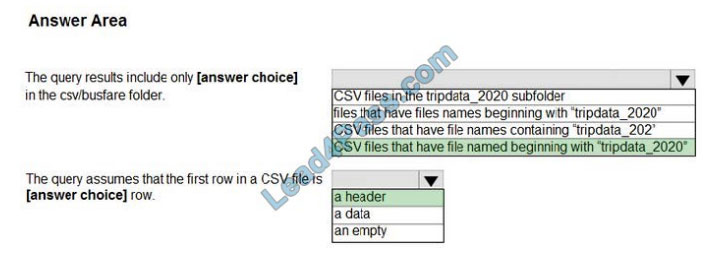

You execute the Transact-SQL query shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

Hot Area:

Correct Answer:

Box 1: CSV files that have file names beginning with "tripdata_2020"

Box 2: a header

FIRSTROW = 'first_row'

Specifies the number of the first row to load. The default is 1 and indicates the first row in the specified data file. The row numbers are determined by counting the row terminators. FIRSTROW is 1-based.
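As a rough sketch of how FIRSTROW interacts with a header row, the query below reads CSV files whose names begin with tripdata_2020 and starts loading at row 2, so a single header row is skipped. The storage account, container, and folder names are hypothetical placeholders, and the query in the exhibit may differ.

```sql
-- Hypothetical storage URL; replace the placeholders with real values.
SELECT TOP 10 *
FROM OPENROWSET(
    BULK 'https://<storage-account>.dfs.core.windows.net/<container>/busfare/tripdata_2020*.csv',
    FORMAT = 'CSV',
    PARSER_VERSION = '2.0',
    FIRSTROW = 2  -- row numbers are 1-based, so 2 skips one header row
) AS tripdata;
```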

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-openrowset

QUESTION 2

You have an Azure Databricks resource.

You need to log actions that relate to changes in compute for the Databricks resource.

Which Databricks services should you log?

A. clusters

B. jobs

C. DBFS

D. SSH

E. workspace

Cloud Provider Infrastructure Logs.

Databricks logging allows security and admin teams to demonstrate conformance to data governance standards within or from a Databricks workspace. Customers, especially in the regulated industries, also need records on activities like:

1. User access control to cloud data storage

2. Cloud Identity and Access Management roles

3. User access to cloud network and compute

Azure Databricks offers three distinct workloads on several VM instances tailored for your data analytics workflow: the Jobs Compute and Jobs Light Compute workloads make it easy for data engineers to build and execute jobs, and the All-Purpose Compute workload makes it easy for data scientists to explore, visualize, manipulate, and share data and insights interactively.

Reference: https://databricks.com/blog/2020/03/25/trust-but-verify-with-databricks.html

QUESTION 3

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Data Lake Storage account that contains a staging zone.

You need to design a daily process to ingest incremental data from the staging zone, transform the data by executing an R script, and then insert the transformed data into a data warehouse in Azure Synapse Analytics.

Solution: You use an Azure Data Factory schedule trigger to execute a pipeline that executes a mapping data flow, and then inserts the data into the data warehouse.

Does this meet the goal?

A. Yes

B. No

If you need to transform data in a way that is not supported by Data Factory, you can create a custom activity, not a mapping data flow, with your own data processing logic and use the activity in the pipeline. You can create a custom activity to run R scripts on your HDInsight cluster with R installed.

Reference: https://docs.microsoft.com/en-US/azure/data-factory/transform-data

QUESTION 4

You have an Azure SQL database. The database contains a table that uses a columnstore index and is accessed infrequently.

You enable columnstore archival compression.

What are two possible results of the configuration? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A. Queries that use the index will consume more disk I/O.

B. Queries that use the index will retrieve fewer data pages.

C. The index will consume more disk space.

D. The index will consume more memory.

E. Queries that use the index will consume more CPU resources.

For rowstore tables and indexes, use the data compression feature to help reduce the size of the database. In addition to saving space, data compression can help improve performance of I/O intensive workloads because the data is stored in fewer pages and queries need to read fewer pages from disk.

Use columnstore archival compression to further reduce the data size for situations when you can afford extra time and CPU resources to store and retrieve the data.
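A minimal sketch of enabling archival compression follows. The table name dbo.SalesHistory and the index name cci_SalesHistory are assumptions for illustration; they do not come from the question.

```sql
-- Assumed names: dbo.SalesHistory with a clustered columnstore index cci_SalesHistory.
-- Rebuild the index with archival compression (smaller on disk, more CPU to read).
ALTER INDEX cci_SalesHistory ON dbo.SalesHistory
REBUILD WITH (DATA_COMPRESSION = COLUMNSTORE_ARCHIVE);

-- Revert to standard columnstore compression if the CPU cost becomes a problem.
ALTER INDEX cci_SalesHistory ON dbo.SalesHistory
REBUILD WITH (DATA_COMPRESSION = COLUMNSTORE);
```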

QUESTION 5

You have an Always On availability group deployed to Azure virtual machines. The availability group contains a database named DB1 and has two nodes named SQL1 and SQL2. SQL1 is the primary replica.

You need to initiate a full backup of DB1 on SQL2.

Which statement should you run?

A. BACKUP DATABASE DB1 TO URL='https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak' with (Differential, STATS=5, COMPRESSION);

B. BACKUP DATABASE DB1 TO URL='https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak' with (COPY_ONLY, STATS=5, COMPRESSION);

C. BACKUP DATABASE DB1 TO URL='https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak' with (File_Snapshot, STATS=5, COMPRESSION);

D. BACKUP DATABASE DB1 TO URL='https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak' with (NoInit, STATS=5, COMPRESSION);

BACKUP DATABASE supports only copy-only full backups of databases, files, or filegroups when it's executed on secondary replicas. Copy-only backups don't impact the log chain or clear the differential bitmap.

Incorrect Answers:

A: Differential backups are not supported on secondary replicas. The backup fails with an error because secondary replicas support only copy-only database backups.
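For reference, a copy-only full backup on the secondary could look like the sketch below. It reuses the storage URL from the answer options and assumes a credential for the container already exists. Note that T-SQL lists WITH options without the parentheses shown in the answer choices.

```sql
-- Run on SQL2 (the secondary replica).
-- Assumes a credential for the storage container has already been created.
BACKUP DATABASE DB1
TO URL = N'https://mystorageaccount.blob.core.windows.net/mycontainer/DB1.bak'
WITH COPY_ONLY, STATS = 5, COMPRESSION;
```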

Reference:

https://docs.microsoft.com/en-us/sql/database-engine/availability-groups/windows/active-secondaries-backup-on-secondary-replicas-always-on-availability-groups

QUESTION 6

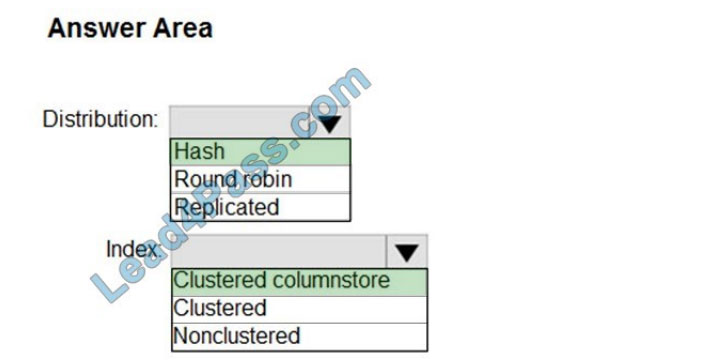

HOTSPOT

You are designing an enterprise data warehouse in Azure Synapse Analytics that will store website traffic analytics in a star schema.

You plan to have a fact table for website visits. The table will be approximately 5 GB.

You need to recommend which distribution type and index type to use for the table. The solution must provide the fastest query performance.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: Hash

Consider using a hash-distributed table when:

The table size on disk is more than 2 GB.

The table has frequent insert, update, and delete operations.

Box 2: Clustered columnstore

Clustered columnstore tables offer both the highest level of data compression and the best overall query performance.
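The two choices can be combined in a single table definition, sketched below for a dedicated SQL pool. The table and column names are hypothetical, and the choice of VisitorKey as the hash column is only an assumption for illustration.

```sql
-- Hypothetical fact table; column names are illustrative only.
CREATE TABLE dbo.FactWebsiteVisits
(
    VisitKey     BIGINT NOT NULL,
    VisitorKey   INT    NOT NULL,
    PageKey      INT    NOT NULL,
    VisitDateKey INT    NOT NULL,
    DurationSec  INT    NULL
)
WITH
(
    DISTRIBUTION = HASH(VisitorKey),  -- Box 1: hash distribution
    CLUSTERED COLUMNSTORE INDEX       -- Box 2: clustered columnstore index
);
```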

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-distribute

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-index

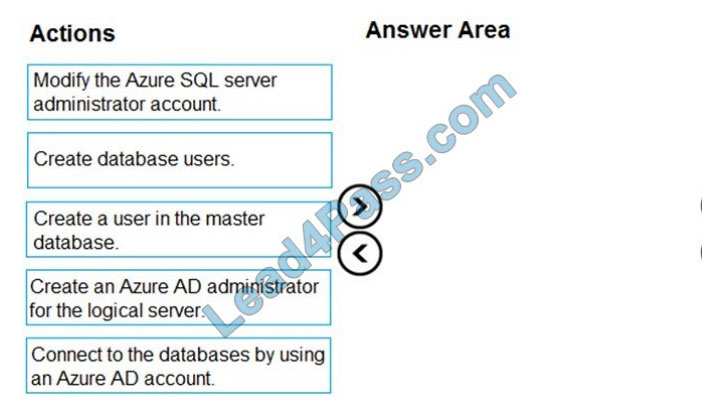

QUESTION 7

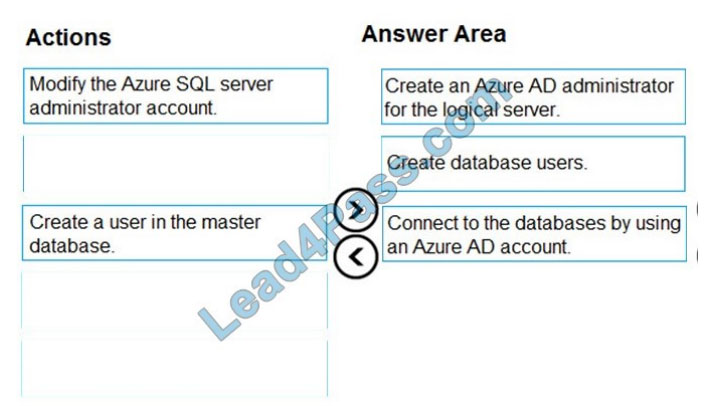

DRAG DROP

You need to configure user authentication for the SERVER1 databases. The solution must meet the security and compliance requirements.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Scenario: Authenticate database users by using Active Directory credentials.

The configuration steps include the following procedures to configure and use Azure Active Directory authentication.

1. Create and populate Azure AD.

2. Optional: Associate or change the active directory that is currently associated with your Azure Subscription.

3. Create an Azure Active Directory administrator. (Step 1)

4. Configure your client computers.

5. Create contained database users in your database mapped to Azure AD identities. (Step 2)

6. Connect to your database by using Azure AD identities. (Step 3)
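Step 2 in the list above can be sketched as follows. The user principal name is a placeholder, and the role assignment is an optional extra that the scenario does not mention.

```sql
-- Run in the user database while connected as the Azure AD administrator.
-- The user principal name is a placeholder.
CREATE USER [user1@contoso.com] FROM EXTERNAL PROVIDER;

-- Optional: grant read access through a built-in database role.
ALTER ROLE db_datareader ADD MEMBER [user1@contoso.com];
```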

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/authentication-aad-overview

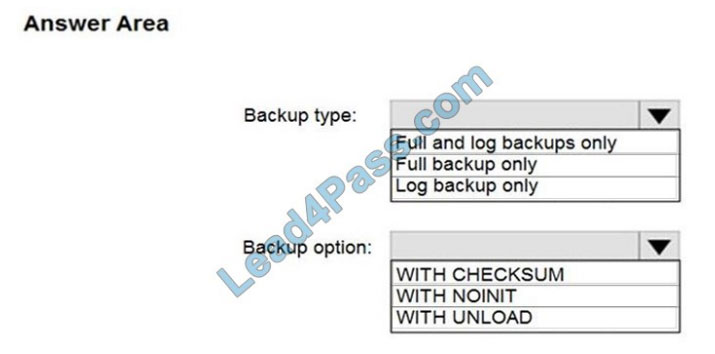

QUESTION 8

HOTSPOT

You have an on-premises Microsoft SQL Server 2016 server named Server1 that contains a database named DB1.

You need to perform an online migration of DB1 to an Azure SQL Database managed instance by using Azure Database Migration Service.

How should you configure the backup of DB1? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Hot Area:

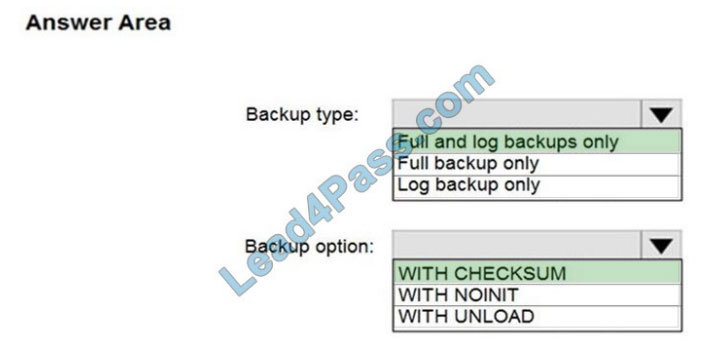

Correct Answer:

Box 1: Full and log backups only

Make sure to take every backup on a separate backup media (backup files). Azure Database Migration Service doesn't support backups that are appended to a single backup file. Take full backup and log backups to separate backup files.

Box 2: WITH CHECKSUM

Azure Database Migration Service uses the backup and restore method to migrate your on-premises databases to SQL Managed Instance. Azure Database Migration Service only supports backups created using checksum.
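A minimal sketch of backups that satisfy both requirements is shown below: each backup goes to its own file and is taken WITH CHECKSUM. The file share path and file names are assumptions.

```sql
-- Hypothetical file share; each backup is written to a separate file.
BACKUP DATABASE DB1
TO DISK = N'\\fileserver\backups\DB1_full.bak'
WITH CHECKSUM;

BACKUP LOG DB1
TO DISK = N'\\fileserver\backups\DB1_log_001.trn'
WITH CHECKSUM;
```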

Incorrect Answers:

NOINIT

Indicates that the backup set is appended to the specified media set, preserving existing backup sets. If a media password is defined for the media set, the password must be supplied. NOINIT is the default.

UNLOAD

Specifies that the tape is automatically rewound and unloaded when the backup is finished. UNLOAD is the default when a session begins.

Reference:

https://docs.microsoft.com/en-us/azure/dms/known-issues-azure-sql-db-managed-instance-online

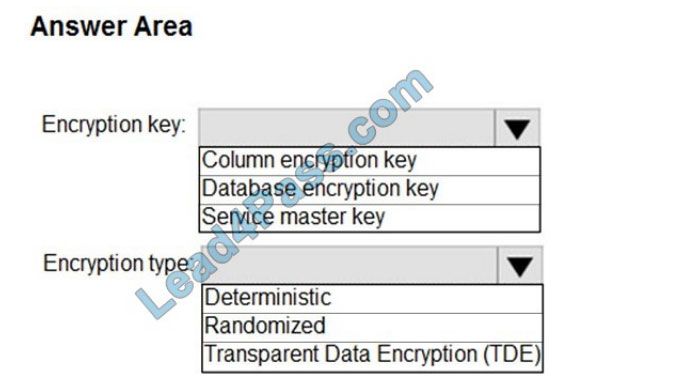

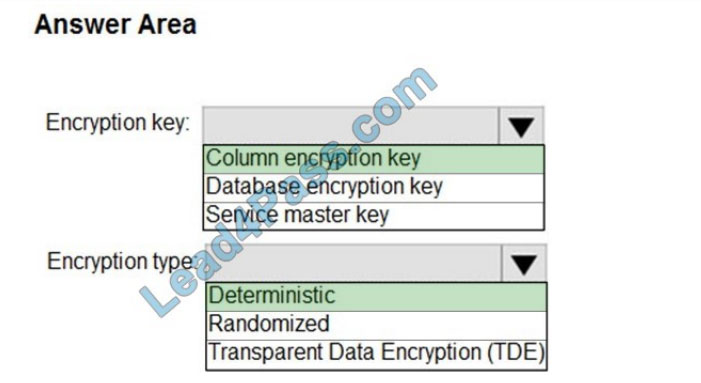

QUESTION 9

HOTSPOT

You have an Azure SQL database named DB1 that contains two tables named Table1 and Table2. Both tables contain a column named Column1. Column1 is used for joins by an application named App1.

You need to protect the contents of Column1 at rest, in transit, and in use.

How should you protect the contents of Column1? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Explanation:

Box 1: Column encryption Key

Always Encrypted uses two types of keys: column encryption keys and column master keys. A column encryption key is used to encrypt data in an encrypted column. A column master key is a key-protecting key that encrypts one or more column encryption keys.

Incorrect Answers:

TDE encrypts the storage of an entire database by using a symmetric key called the Database Encryption Key (DEK).

Box 2: Deterministic

Always Encrypted is a feature designed to protect sensitive data, such as credit card numbers or national identification numbers (for example, U.S. social security numbers), stored in Azure SQL Database or SQL Server databases. Always Encrypted allows clients to encrypt sensitive data inside client applications and never reveal the encryption keys to the Database Engine (SQL Database or SQL Server).

Always Encrypted supports two types of encryption: randomized encryption and deterministic encryption.

Deterministic encryption always generates the same encrypted value for any given plain text value. Using deterministic encryption allows point lookups, equality joins, grouping and indexing on encrypted columns.
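As an illustration only, a column definition that uses deterministic encryption might look like the sketch below. The table name dbo.Customers and the key name CEK1 are hypothetical, and the column encryption key is assumed to exist already. For an equality join to work, the joined columns would typically need the same encryption type and the same column encryption key.

```sql
-- Hypothetical table; CEK1 is an assumed, pre-existing column encryption key.
CREATE TABLE dbo.Customers
(
    CustomerId INT NOT NULL,
    -- Deterministic encryption on character columns requires a BIN2 collation.
    Column1 CHAR(11) COLLATE Latin1_General_BIN2
        ENCRYPTED WITH (
            COLUMN_ENCRYPTION_KEY = CEK1,
            ENCRYPTION_TYPE = DETERMINISTIC,
            ALGORITHM = 'AEAD_AES_256_CBC_HMAC_SHA_256'
        ) NOT NULL
);
```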

Incorrect Answers:

1. Randomized encryption uses a method that encrypts data in a less predictable manner. Randomized encryption is more secure, but prevents searching, grouping, indexing, and joining on encrypted columns.

2. Transparent data encryption (TDE) helps protect Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics against the threat of malicious offline activity by encrypting data at rest. It performs real-time encryption and decryption of the database, associated backups, and transaction log files at rest without requiring changes to the application.

Reference: https://docs.microsoft.com/en-us/sql/relational-databases/security/encryption/always-encrypted-database-engine

QUESTION 10

You have SQL Server on Azure virtual machines in an availability group.

You have a database named DB1 that is NOT in the availability group.

You create a full database backup of DB1.

You need to add DB1 to the availability group.

Which restore option should you use on the secondary replica?

A. Restore with Recovery

B. Restore with Norecovery

C. Restore with Standby

Preparing a secondary database for an Always On availability group requires two steps:

1. Restore a recent database backup of the primary database and subsequent log backups onto each server instance that hosts the secondary replica, using RESTORE WITH NORECOVERY.

2. Join the restored database to the availability group.
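The two steps above can be sketched as follows. The backup paths and the availability group name AG1 are assumptions, and the comments note where each statement would run.

```sql
-- On the secondary replica: restore without recovery so the database stays in the restoring state.
RESTORE DATABASE DB1
FROM DISK = N'\\fileserver\backups\DB1_full.bak'
WITH NORECOVERY;

RESTORE LOG DB1
FROM DISK = N'\\fileserver\backups\DB1_log.trn'
WITH NORECOVERY;

-- On the primary replica: add the database to the availability group (name assumed).
ALTER AVAILABILITY GROUP [AG1] ADD DATABASE DB1;

-- On the secondary replica: join the restored database to the group.
ALTER DATABASE DB1 SET HADR AVAILABILITY GROUP = [AG1];
```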

Reference:

https://docs.microsoft.com/en-us/sql/database-engine/availability-groups/windows/manually-prepare-a-secondary-database-for-an-availability-group-sql-server

QUESTION 11

You need to implement the surrogate key for the retail store table. The solution must meet the sales transaction dataset requirements.

What should you create?

A. a table that has a FOREIGN KEY constraint

B. a table that has an IDENTITY property

C. a user-defined SEQUENCE object

D. a system-versioned temporal table

Scenario: Contoso requirements for the sales transaction dataset include: Implement a surrogate key to account for changes to the retail store addresses.

A surrogate key on a table is a column with a unique identifier for each row. The key is not generated from the table data. Data modelers like to create surrogate keys on their tables when they design data warehouse models. You can use the IDENTITY property to achieve this goal simply and effectively without affecting load performance.
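A minimal sketch of a surrogate key built with the IDENTITY property in a dedicated SQL pool is shown below. The table and column names are hypothetical, and the round-robin distribution is just one possible choice.

```sql
-- Hypothetical dimension table; StoreKey is the surrogate key.
CREATE TABLE dbo.DimRetailStore
(
    StoreKey     INT IDENTITY(1,1) NOT NULL,  -- generated by the engine, not from source data
    StoreId      INT               NOT NULL,  -- business key from the source system
    StoreAddress NVARCHAR(200)     NULL
)
WITH
(
    DISTRIBUTION = ROUND_ROBIN,
    CLUSTERED COLUMNSTORE INDEX
);
```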

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-identity

QUESTION 12

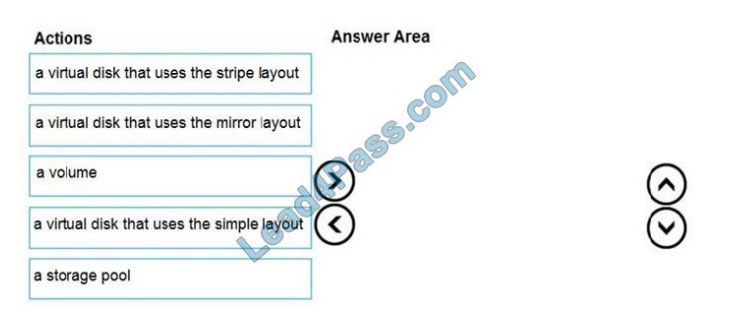

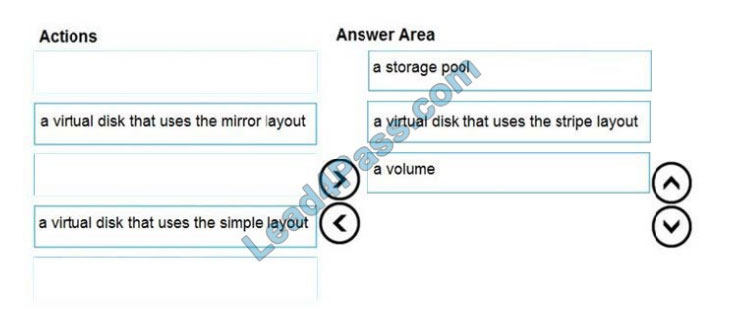

DRAG DROP

You are building an Azure virtual machine.

You allocate two 1-TiB, P30 premium storage disks to the virtual machine. Each disk provides 5,000 IOPS.

You plan to migrate an on-premises instance of Microsoft SQL Server to the virtual machine. The instance has a database that contains a 1.2-TiB data file. The database requires 10,000 IOPS.

You need to configure storage for the virtual machine to support the database.

Which three objects should you create in sequence? To answer, move the appropriate objects from the list of objects to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Follow these steps to create a striped virtual disk:

1. Create Log Storage Pool.

2. Create Virtual Disk.

3. Create Volume.

Box 1: a storage pool

Box 2: a virtual disk that uses stripe layout

Disk Striping: Use multiple disks and stripe them together to get a combined higher IOPS and Throughput limit. The combined limit per VM should be higher than the combined limits of attached premium disks.

Box 3: a volume

Reference:

https://hanu.com/hanu-how-to-striping-of-disks-for-azure-sql-server/

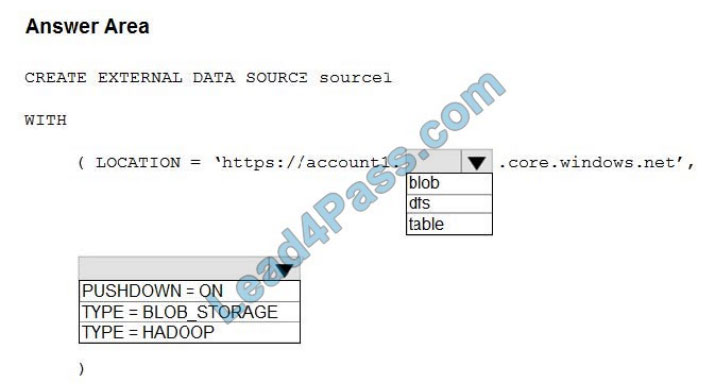

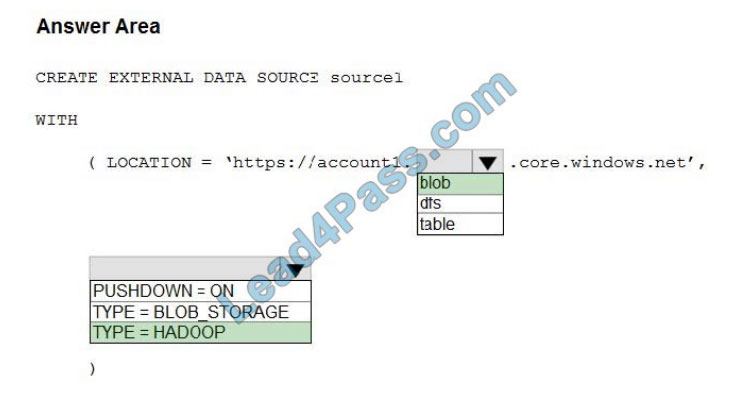

QUESTION 13

HOTSPOT

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 and an Azure Data Lake Storage Gen2 account named Account1.

You plan to access the files in Account1 by using an external table.

You need to create a data source in Pool1 that you can reference when you create the external table.

How should you complete the Transact-SQL statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: blob

The following example creates an external data source for Azure Data Lake Gen2:

CREATE EXTERNAL DATA SOURCE YellowTaxi WITH ( LOCATION = 'https://azureopendatastorage.blob.core.windows.net/nyctlc/yellow/', TYPE = HADOOP)

Box 2: HADOOP

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-tables-external-tables

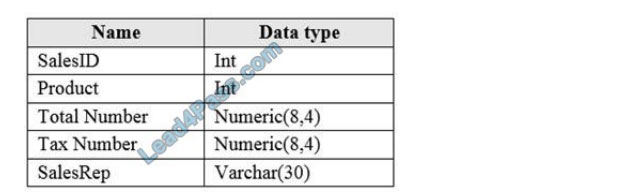

QUESTION 14

You have an Azure SQL database that contains a table named FactSales. FactSales contains the columns shown in the following table.

FactSales has 6 billion rows and is loaded nightly by using a batch process.

Which type of compression provides the greatest space reduction for the database?

A. page compression

B. row compression

C. columnstore compression

D. columnstore archival compression

Columnstore tables and indexes are always stored with columnstore compression. You can further reduce the size of columnstore data by configuring an additional compression called archival compression.

Note: Columnstore – The columnstore index is also logically organized as a table with rows and columns, but the data is physically stored in a column-wise data format.

Incorrect Answers:

B: Rowstore – The rowstore index is the traditional style that has been around since the initial release of SQL Server.

For rowstore tables and indexes, use the data compression feature to help reduce the size of the database.

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/data-compression/data-compression

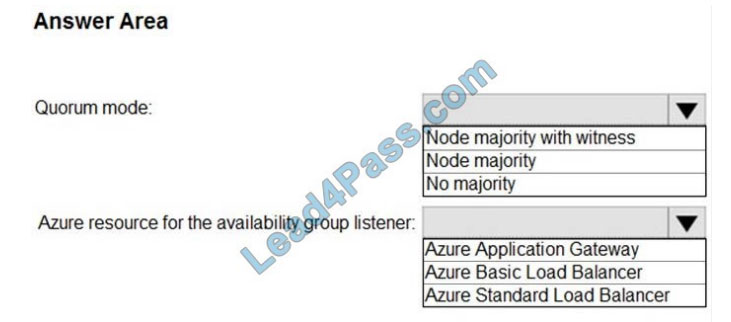

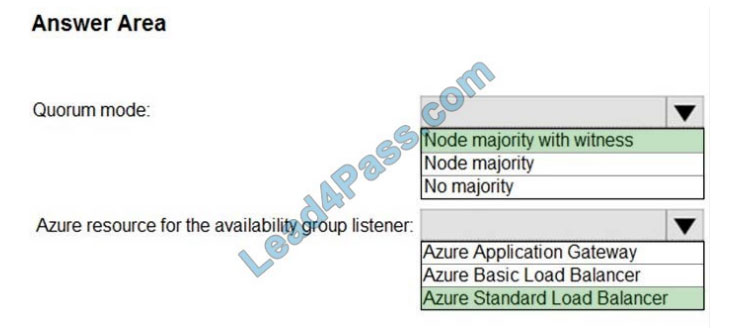

QUESTION 15

HOTSPOT

You need to recommend a configuration for ManufacturingSQLDb1 after the migration to Azure. The solution must meet the business requirements.

What should you include in the recommendation? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: Node majority with witness

As a general rule when you configure a quorum, the voting elements in the cluster should be an odd number. Therefore, if the cluster contains an even number of voting nodes, you should configure a disk witness or a file share witness.

Note: Mode: Node majority with witness (disk or file share)

Nodes have votes. In addition, a quorum witness has a vote. The cluster quorum is the majority of voting nodes in the active cluster membership plus a witness vote. A quorum witness can be a designated disk witness or a designated file share witness.

Box 2: Azure Standard Load Balancer

Microsoft guarantees that a Load Balanced Endpoint using Azure Standard Load Balancer, serving two or more Healthy Virtual Machine Instances, will be available 99.99% of the time.

Scenario: Business Requirements

The business requirements that Litware identifies include: meet an SLA of 99.99% availability for all Azure deployments.

Incorrect Answers:

Basic Balancer: No SLA is provided for Basic Load Balancer.

Note: There are two main options for setting up your listener: external (public) or internal. The external (public) listener uses an internet-facing load balancer and is associated with a public Virtual IP (VIP) that is accessible over the internet. An internal listener uses an internal load balancer and only supports clients within the same Virtual Network.

Reference:

https://technet.microsoft.com/windows-server-docs/failover-clustering/deploy-cloud-witness

https://azure.microsoft.com/en-us/support/legal/sla/load-balancer/v1_0/

Answers:

| Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 | Q11 | Q12 | Q13 | Q14 | Q15 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| image | E | B | BE | B | image | image | image | image | B | B | image | image | D | image |

Microsoft DP-300 official practice test: https://www.mindhub.com/dp-300-administering-relational-databases-on-microsoft-azure-microsoft-official-practice-test/p/MU-DP-300?utm_source=microsoft&utm_medium=certpage&utm_campaign=msofficialpractice

[PDF] Microsoft DP-300 exam PDF download in Google Drive: https://drive.google.com/file/d/1XvH651-LSzW95b2rczWFkYhkxfKLZouY/

Microsoft Certified: Azure Database Administrator Associate – Microsoft DP-300 exam dumps

leads4pass DP-300 exam dumps: https://www.leads4pass.com/dp-300.html

Microsoft Ignite

In Microsoft Ignite you can find all the basic information about Microsoft exams:

- Certification process overview

- Request accommodations

- Register and schedule an exam

- Prepare for an exam

- Exam duration and question types

- Exam scoring and score reports

- …