As of April 2025, the DP-420 exam content is periodically updated to reflect technological advancements and new features in Azure Cosmos DB.

Leads4Pass Microsoft DP-420 exam preparation materials include 158 of the latest exam questions and answers (https://www.leads4pass.com/dp-420.html) to help candidates who practice with them pass the exam.

The following are updates based on the latest exam content:

- New API Support: Enhanced features for APIs such as MongoDB API, Cassandra API, or Gremlin API.

- Performance Optimization: Updated indexing strategies, query optimization techniques, and partitioning strategies.

- Security Enhancements: Azure Cosmos DB now has new security features, such as integration with Azure Key Vault or stricter access controls.

- Multi-Region Replication: Improved configuration and management of globally distributed databases.

- AI and Analytics Integration: Tighter integration with Azure Synapse Analytics or other AI services.

The latest Microsoft DP-420 exam questions are shared online for free.

| Number of exam questions | Free Download | Compare |
|---|---|---|
| 15 | DP-420 PDF | Last shared |

Question 1:

You have a database named db1 in an Azure Cosmos DB Core (SQL) API account.

You have a third-party application that is exposed through a REST API.

You need to migrate data from the application to a container in db1 every week.

What should you use?

A. Database Migration Assistant

B. Azure Data Factory

C. Azure Migrate

Correct Answer: B

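You can use the Copy activity in Azure Data Factory to copy data from and to Azure Cosmos DB (SQL API). Data Factory can read the third-party application's data through its REST connector, and a schedule trigger can run the pipeline every week.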

The Azure Cosmos DB (SQL API) connector is supported for the following activities:

Copy activity with supported source/sink matrix

Mapping data flow

Lookup activity
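A weekly run is configured by attaching a schedule trigger to the pipeline that contains the Copy activity. The sketch below builds such a trigger definition as a Python dictionary and prints it as JSON. The recurrence property names follow the Data Factory schedule trigger JSON format; the trigger name, pipeline name, and start time are hypothetical, and the pipeline itself (REST source, Azure Cosmos DB sink) is assumed to exist already.

```python
import json

# Hypothetical pipeline that copies from the REST connector into a container in db1.
PIPELINE_NAME = "CopyRestToCosmosDb1"


def weekly_trigger(pipeline_name: str, weekday: str = "Sunday", hour: int = 2) -> dict:
    """Build a ScheduleTrigger definition that runs a pipeline once a week."""
    return {
        "name": "WeeklyRestToCosmos",  # hypothetical trigger name
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Week",
                    "interval": 1,  # every week
                    "startTime": "2025-04-06T02:00:00Z",  # hypothetical start
                    "timeZone": "UTC",
                    "schedule": {
                        "weekDays": [weekday],
                        "hours": [hour],
                        "minutes": [0],
                    },
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


print(json.dumps(weekly_trigger(PIPELINE_NAME), indent=2))
```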

Incorrect:

Not A: Data Migration Assistant (DMA) enables you to upgrade to a modern data platform by detecting compatibility issues that can impact database functionality on your new version of SQL Server. It recommends performance and reliability improvements for your target environment.

Not C: Azure Migrate provides a centralized hub to assess and migrate on-premises servers, infrastructure, applications, and data to Azure. It assesses on-premises databases and migrates them to Azure SQL Database or SQL Managed Instance.

Neither tool runs a recurring copy from a REST API into Azure Cosmos DB.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-cosmos-db

Question 2:

You have an Azure Cosmos DB for NoSQL account.

The change feed is enabled on a container named invoice.

You create an Azure function that has a trigger on the change feed.

What is received by the Azure function?

A. All the properties of the updated items

B. only the partition key and the changed properties of the updated items

C. All the properties of the original items and the updated items

D. only the changed properties and the system-defined properties of the updated items

Correct Answer: A

According to the Azure Cosmos DB documentation, the change feed is a persistent record of changes to a container in the order in which they occur. It outputs the list of items that were changed, sorted in the order in which they were modified within each logical partition key value.

An Azure function that has a trigger on the change feed receives all the properties of the updated items, that is, the latest full version of each changed item. The change feed does not include the original items, and it does not return only the changed properties. Each item also carries system-defined properties such as _ts (the last-modified timestamp) and _lsn (the logical sequence number).

Therefore, the correct answer is "All the properties of the updated items."
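To see why the function gets whole items rather than deltas, the sketch below models the change feed in latest version mode. It is a toy simulation, not the Azure Cosmos DB SDK: the class name is hypothetical, a single partition is assumed, and deletes are not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ChangeFeedSim:
    """Toy model of a container's change feed in latest version mode (not the SDK)."""

    lsn_counter: int = 0
    items: Dict[str, dict] = field(default_factory=dict)

    def upsert(self, item: dict) -> None:
        # Every write stores the complete item, stamped with a logical sequence number.
        self.lsn_counter += 1
        self.items[item["id"]] = {**item, "_lsn": self.lsn_counter}

    def read_since(self, checkpoint: int) -> List[dict]:
        # Return the latest full version of each item changed after the checkpoint,
        # ordered by modification. Earlier versions of the same item are not kept.
        changed = [doc for doc in self.items.values() if doc["_lsn"] > checkpoint]
        return sorted(changed, key=lambda doc: doc["_lsn"])


feed = ChangeFeedSim()
feed.upsert({"id": "inv-1", "customerId": "c1", "total": 100})
feed.upsert({"id": "inv-1", "customerId": "c1", "total": 120})  # update touches only total
feed.upsert({"id": "inv-2", "customerId": "c2", "total": 50})
batch = feed.read_since(0)
print(batch)
```

The first entry in `batch` is the complete updated invoice (including the unchanged `customerId`), not just the new `total`, and the earlier version with `total` 100 is not delivered.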

Question 3:

HOTSPOT

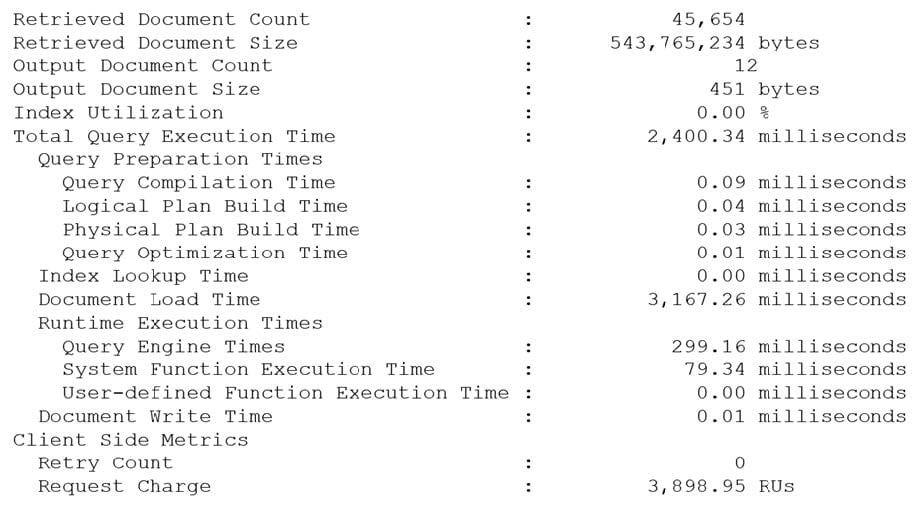

You have an Azure Cosmos DB Core (SQL) API account named account1.

In account1, you run the following query in a container that contains 100 GB of data.

```sql
SELECT *
FROM c
WHERE LOWER(c.categoryid) = "hockey"
```

You view the following metrics while performing the query.

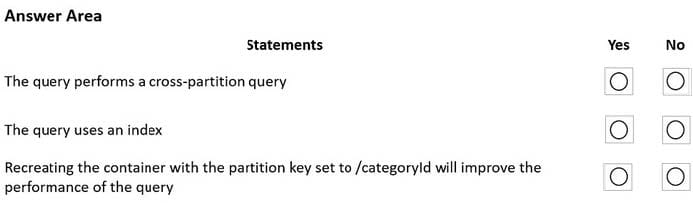

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: No

Each physical partition should have its index, but since no index is used, the query is not cross-partition.

Box 2: No

Index utilization is 0%, and Index lookup time is also zero.

Box 3: Yes

A partition key index will be created, and the query will be performed across the partitions.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/how-to-query-container

Question 4:

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account. Upserts of items in container1 occur every three seconds.

You have an Azure Functions app named function1 that is supposed to run whenever items are inserted or replaced in container1.

You discover that function1 runs, but not on every upsert.

You need to ensure that function1 processes each upsert within one second of the upsert.

Which property should you change in the function.json file of function1?

A. checkpointInterval

B. Lease Collections Throughput

C. maxItemsPerInvocation

D. feedPollDelay

Correct Answer: D

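An upsert either inserts a new item or replaces an existing one, so each upsert appears in the change feed.

FeedPollDelay: The time (in milliseconds) for the delay between polling a partition for new changes on the feed, after all current changes are drained. The default is 5,000 milliseconds, or 5 seconds. Because upserts occur every three seconds, a five-second delay lets more than one upsert accumulate between polls and can hold a change for up to five seconds. Setting feedPollDelay below 1,000 milliseconds lets function1 pick up each upsert within one second.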
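The fix amounts to lowering feedPollDelay in function1's trigger binding. The sketch below builds an illustrative function.json as a Python dictionary. Property names follow the Cosmos DB trigger binding for extension 3.x (collectionName, leaseCollectionName, connectionStringSetting); extension 4.x renames several of them (for example, containerName and connection). The setting name, database name, lease container name, and the 500 ms value are assumptions.

```python
import json

# Hypothetical function.json for function1 (extension 3.x-style property names).
function_json = {
    "bindings": [
        {
            "type": "cosmosDBTrigger",
            "name": "documents",
            "direction": "in",
            "connectionStringSetting": "Account1Connection",  # hypothetical app setting
            "databaseName": "db1",  # hypothetical database name
            "collectionName": "container1",
            "leaseCollectionName": "leases",  # hypothetical lease container
            "createLeaseCollectionIfNotExists": True,
            # Poll every 500 ms after the feed is drained instead of the
            # 5,000 ms default, so each upsert is seen within one second.
            "feedPollDelay": 500,
        }
    ]
}

print(json.dumps(function_json, indent=2))
```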

Incorrect Answers:

A: checkpointInterval: When set, it defines, in milliseconds, the interval between lease checkpoints. By default, a checkpoint is written after each function call.

C: maxItemsPerInvocation: When set, this property sets the maximum number of items received per Function call. If operations in the monitored collection are performed through stored procedures, transaction scope is preserved when reading items from the change feed. As a result, the number of items received could be higher than the specified value, so that the items changed by the same transaction are returned as part of one atomic batch.

Reference: https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb-v2-trigger

Question 5:

You need to create a database in an Azure Cosmos DB Core (SQL) API account.

The database will contain three containers named coll1, coll2, and coll3. The coll1 container will have unpredictable read and write volumes.

The coll2 and coll3 containers will have predictable read and write volumes.

The expected maximum throughput for coll1 and coll2 is 50,000 request units per second (RU/s) each.

How should you provision the collection while minimizing costs?

A. Create a serverless account.

B. Create a provisioned throughput account. Set the throughput for coll1 to Autoscale. Set the throughput for coll2 and coll3 to Manual.

C. Create a provisioned throughput account. Set the throughput for coll1 to Manual. Set the throughput for coll2 and coll3 to Autoscale.

Correct Answer: B

Azure Cosmos DB offers two capacity modes: provisioned throughput and serverless. In provisioned throughput mode, you configure an amount of throughput, expressed in request units per second (RU/s), on your databases and containers, and you are billed for the throughput you've provisioned regardless of how many RUs are consumed. In serverless mode, you run database operations without provisioning capacity in advance, and you are billed for the RUs consumed by your operations and the storage consumed by your data. To minimize costs, consider the following factors:

- The read and write volumes of your containers
- The predictability and variability of your traffic
- The latency and throughput requirements of your application
- The geo-distribution and availability needs of your data

Based on these factors, the best option is B: create a provisioned throughput account, set the throughput for coll1 to Autoscale, and set the throughput for coll2 and coll3 to Manual. A serverless account (option A) is intended for intermittent, low-volume traffic, not for containers that need up to 50,000 RU/s. Option C reverses the modes: manual throughput for coll1 would have to be provisioned for its peak at all times, and autoscale costs more than manual throughput for steady workloads.

This option has the following advantages:

- It handles the unpredictable read and write volumes of coll1 by using Autoscale, which automatically scales the provisioned throughput based on the current load.
- It handles the predictable read and write volumes of coll2 and coll3 by using Manual, which sets a fixed amount of provisioned throughput that meets your performance needs.
- It optimizes costs because each container uses the throughput model that fits its traffic.
- It lets you enable geo-distribution for the account if you need to replicate your data across multiple regions.

This option also has some limitations:

- It may not be optimal if all containers have intermittent or bursty traffic that is hard to forecast or has a low average-to-peak ratio.
- It may not be cost-effective if all containers have low or sporadic traffic that does not justify provisioned capacity.

Depending on your specific use case and requirements, you may need to choose a different option. For example, you could use a serverless account if all containers have low or sporadic traffic that does not require predictable performance or geo-distribution. Alternatively, you could use a provisioned throughput account with Manual throughput for all containers if all of them have stable, consistent traffic.
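The cost trade-off between the two throughput modes can be sketched with a small calculation. This is a minimal model under stated assumptions: the $0.008 per 100 RU/s per hour rate is the figure quoted in Question 11 of this post and may not match current pricing; autoscale is assumed to be billed at 1.5 times the manual rate (the multiplier for single-region-write accounts), on the highest RU/s reached in each hour, and never below 10% of the configured maximum. The traffic profiles are hypothetical.

```python
from decimal import Decimal
from typing import List

# Assumed rate (USD per 100 RU/s per hour), taken from Question 11 of this post.
MANUAL_RATE = Decimal("0.008")
# Assumed autoscale multiplier for a single-region-write account.
AUTOSCALE_MULTIPLIER = Decimal("1.5")


def manual_cost(provisioned_rus: int, hours: int) -> Decimal:
    """Manual throughput is billed for the full provisioned RU/s every hour."""
    return MANUAL_RATE * Decimal(provisioned_rus) / 100 * hours


def autoscale_cost(max_rus: int, hourly_peaks: List[int]) -> Decimal:
    """Autoscale bills each hour at that hour's peak RU/s, between 10% of max and max."""
    floor = max_rus // 10
    total = Decimal(0)
    for peak in hourly_peaks:
        billed_rus = min(max(peak, floor), max_rus)
        total += MANUAL_RATE * AUTOSCALE_MULTIPLIER * Decimal(billed_rus) / 100
    return total


# Hypothetical traffic: coll1 bursts for 2 hours a day; coll2 runs flat out.
bursty_day = [50_000] * 2 + [5_000] * 22
steady_day = [50_000] * 24
print("coll1 manual:", manual_cost(50_000, 24), "autoscale:", autoscale_cost(50_000, bursty_day))
print("coll2 manual:", manual_cost(50_000, 24), "autoscale:", autoscale_cost(50_000, steady_day))
```

Under these assumptions, the bursty day costs 25.2 with autoscale versus 96 with manual throughput sized for the peak, while the steady day costs 96 with manual versus 144 with autoscale, which is why coll1 gets Autoscale and coll2 and coll3 get Manual.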

Question 6:

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Cosmos DB Core (SQL) API account named account1 that uses autoscale throughput.

You need to run an Azure function when the normalized request units per second for a container in account1 exceeds a specific value.

Solution: You configure an application to use the change feed processor to read the change feed, and you configure the application to trigger the function.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

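Instead, configure an Azure Monitor metric alert on the container's Normalized RU Consumption metric and use an action group to trigger the function. The change feed records changes to items, not throughput metrics, so reading it cannot detect when normalized RU/s exceeds a threshold.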

You can set up alerts from the Azure Cosmos DB pane or the Azure Monitor service in the Azure portal.
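The check that a metric alert performs on each evaluation can be sketched as follows. This is a toy model, not the Azure Monitor API: Azure Monitor evaluates the rule as a managed service, and the function name, the Maximum aggregation, and the threshold value here are assumptions chosen for illustration.

```python
from typing import Callable, List, Sequence


def evaluate_metric_alert(
    samples: Sequence[float],
    threshold: float,
    action: Callable[[float], None],
) -> bool:
    """Toy model of one evaluation of a metric alert rule (not the Azure Monitor API).

    samples: Normalized RU Consumption values (0-100 %) for the container during
    the evaluation window. The rule uses Maximum aggregation with a "greater than"
    condition; `action` stands in for the action group that triggers the function.
    """
    observed = max(samples)
    if observed > threshold:
        action(observed)
        return True
    return False


invocations: List[float] = []
fired = evaluate_metric_alert([40.0, 72.5, 95.0], threshold=90.0, action=invocations.append)
print(fired, invocations)
```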

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/create-alerts

Question 7:

You have an Azure Cosmos DB for NoSQL account named account1 that supports an application named App1. App1 uses the consistent prefix consistency level.

You configure account1 to use a dedicated gateway and integrated cache.

You need to ensure that App1 can use the integrated cache.

Which two actions should you perform for App1? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Change the connection mode to direct

B. Change the account endpoint to https://account1.sqlx.cosmos.azure.com.

C. Change the consistency level of requests to strong.

D. Change the consistency level of requests to session.

E. Change the account endpoint to https://account1.documents.azure.com

Correct Answer: BD

The Azure Cosmos DB integrated cache is an in-memory cache that is built into the Azure Cosmos DB dedicated gateway. The dedicated gateway is front-end compute that stores cached data and routes requests to the backend database.

You can choose from a variety of dedicated gateway sizes based on the number of cores and the memory needed for your workload. The integrated cache can reduce the RU consumption and latency of read operations by serving them from the cache instead of the backend containers.

For your scenario, to ensure that App1 can use the integrated cache, you should perform these two actions:

Change the account endpoint to https://account1.sqlx.cosmos.azure.com. This is the dedicated gateway endpoint that you need to use to connect to your Azure Cosmos DB account and take advantage of the integrated cache. The standard gateway endpoint (https://account1.documents.azure.com) does not use the integrated cache. App1 must also use gateway connection mode, because direct mode bypasses the dedicated gateway, so option A is incorrect.

Change the consistency level of requests to session. The integrated cache serves only read requests that use session or eventual consistency, and session is the stronger of the two. App1 currently uses consistent prefix, so its reads bypass the integrated cache. Requests that use strong or bounded staleness consistency (option C) also bypass the cache and go directly to the backend containers.
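The conditions App1's read requests must meet to hit the integrated cache can be summarized in a small predicate. The function below is hypothetical (it is not part of any SDK); it only encodes the rules described above: dedicated gateway endpoint, gateway connection mode, and session or eventual consistency.

```python
from urllib.parse import urlparse

# Consistency levels whose read requests can be served by the integrated cache.
CACHE_CONSISTENCY_LEVELS = {"Session", "Eventual"}


def uses_integrated_cache(endpoint: str, connection_mode: str, consistency: str) -> bool:
    """Hypothetical helper: can a read request with these settings use the cache?"""
    host = urlparse(endpoint).hostname or ""
    via_dedicated_gateway = host.endswith(".sqlx.cosmos.azure.com")
    return (
        via_dedicated_gateway
        and connection_mode == "Gateway"  # direct mode bypasses the dedicated gateway
        and consistency in CACHE_CONSISTENCY_LEVELS
    )


print(uses_integrated_cache("https://account1.sqlx.cosmos.azure.com", "Gateway", "Session"))
```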

Question 8:

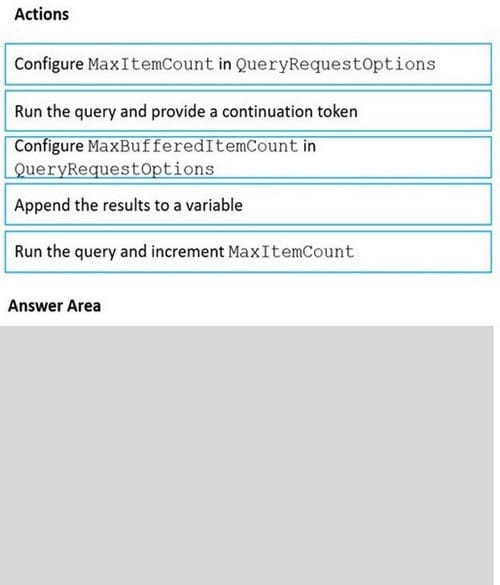

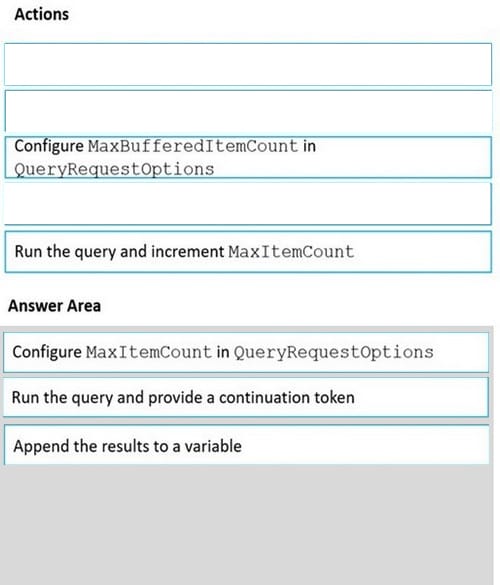

DRAG DROP

You have an app that stores data in an Azure Cosmos DB Core (SQL) API account. The app performs queries that return large result sets.

You need to return a complete result set to the app by using pagination. Each page of results must return 80 items.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Explanation:

Step 1: Configure the MaxItemCount in QueryRequestOptions

You can specify the maximum number of items returned by a query by setting the MaxItemCount. The MaxItemCount is specified per request and tells the query engine to return that number of items or fewer.

Step 2: Run the query and provide a continuation token

In the .NET SDK and Java SDK, you can optionally use continuation tokens as a bookmark for your query's progress. Azure Cosmos DB query executions are stateless at the server side and can be resumed at any time by using the continuation token.

If the query returns a continuation token, then there are additional query results.

Step 3: Append the results to a variable

Add the items from each page to a variable that holds the complete result set, and repeat the query with the latest continuation token until no token is returned.
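The three steps can be sketched as a loop. This is a simulation, not the Azure Cosmos DB SDK: `query_page` is a hypothetical stand-in for one query request, and its continuation token is a plain offset, whereas real tokens are opaque strings that the client should pass back unchanged.

```python
from typing import List, Optional, Tuple


def query_page(
    results: List[dict], max_item_count: int, continuation: Optional[str]
) -> Tuple[List[dict], Optional[str]]:
    """Hypothetical single query request: up to max_item_count items plus a
    continuation token, or None when the result set is exhausted."""
    start = int(continuation) if continuation else 0
    end = start + max_item_count
    page = results[start:end]
    next_token = str(end) if end < len(results) else None
    return page, next_token


def read_all(results: List[dict], max_item_count: int = 80) -> Tuple[List[dict], List[int]]:
    # Step 1: max_item_count plays the role of MaxItemCount in QueryRequestOptions.
    collected: List[dict] = []  # Step 3: the variable that pages are appended to
    page_sizes: List[int] = []
    token: Optional[str] = None
    while True:
        # Step 2: run the query, passing the token returned by the previous page.
        page, token = query_page(results, max_item_count, token)
        collected.extend(page)
        page_sizes.append(len(page))
        if token is None:  # no token means there are no more results
            break
    return collected, page_sizes


items = [{"id": str(i)} for i in range(200)]
all_items, sizes = read_all(items)
print(len(all_items), sizes)
```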

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/sql-query-pagination

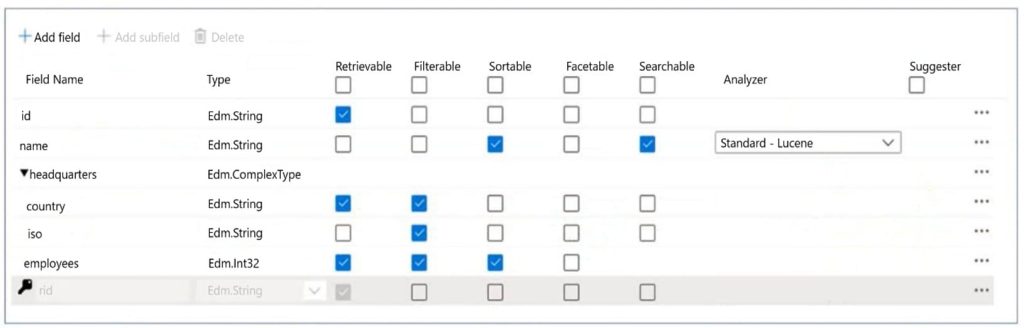

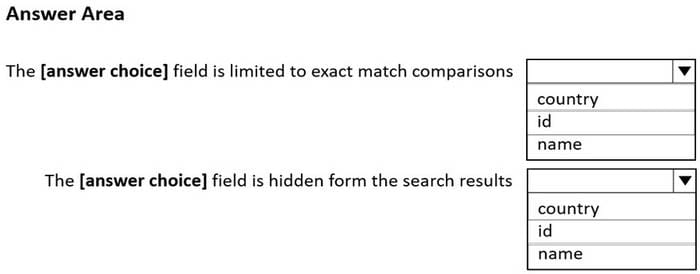

Question 9:

HOTSPOT

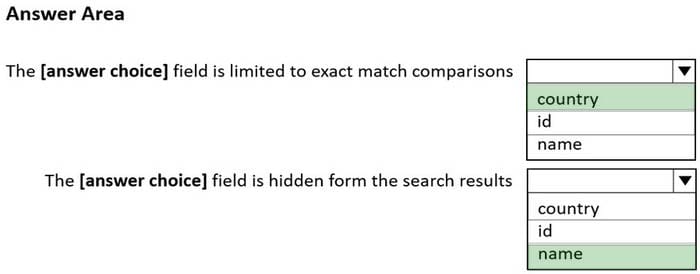

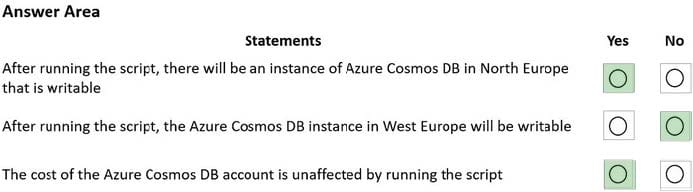

You configure Azure Cognitive Search to index a container in an Azure Cosmos DB Core (SQL) API account as shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: country

The country field is filterable.

Note: filterable: Indicates whether to enable the field to be referenced in $filter queries. Filterable differs from searchable in how strings are handled. Fields of type Edm.String or Collection(Edm.String) that are filterable do not undergo lexical analysis, so comparisons are for exact matches only.

Box 2: name

The name field is not Retrievable.

Retrievable: Indicates whether the field can be returned in a search result. Set this attribute to false if you want to use a field (for example, margin) as a filter, sorting, or scoring mechanism but do not want the field to be visible to the end user.

Note: searchable: Indicates whether the field is full-text searchable and can be referenced in search queries.
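The effect of the filterable and retrievable attributes can be illustrated with a hypothetical index definition. The field attribute names (searchable, filterable, retrievable, key) are those of the Create Index REST API; the index name, the field list, and the filter value are assumptions and do not reproduce the exhibit.

```python
from typing import List
from urllib.parse import urlencode

# Hypothetical index definition; only the attribute names come from the REST API.
index = {
    "name": "hypothetical-cosmos-index",
    "fields": [
        {"name": "id", "type": "Edm.String", "key": True, "retrievable": True},
        {"name": "country", "type": "Edm.String", "filterable": True,
         "searchable": False, "retrievable": True},
        {"name": "name", "type": "Edm.String", "searchable": True,
         "filterable": False, "retrievable": False},
    ],
}


def filterable_fields(idx: dict) -> List[str]:
    """Fields that can appear in a $filter expression."""
    return [f["name"] for f in idx["fields"] if f.get("filterable")]


def returned_fields(idx: dict) -> List[str]:
    """Fields that can appear in search results (retrievable defaults to true)."""
    return [f["name"] for f in idx["fields"] if f.get("retrievable", True)]


# $filter on a filterable string field is an exact match (no lexical analysis).
query_string = urlencode({"search": "*", "$filter": "country eq 'Canada'"})
print(filterable_fields(index), returned_fields(index), query_string)
```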

Reference:

https://docs.microsoft.com/en-us/rest/api/searchservice/create-index

Question 10:

You need to implement a solution to meet the product catalog requirements. What should you do to implement the conflict resolution policy?

A. Remove the frequently changed field from the index policy of the con-product container.

B. Disable indexing on all fields in the index policy of the con-product container.

C. Set the default consistency level for account1 to eventual.

D. Create a new container and migrate the product catalog data to the new container.

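Correct Answer: D

The conflict resolution policy of a container can be set only when the container is created; it cannot be changed afterward. To apply the required policy, create a new container with that policy and migrate the product catalog data to it.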

Question 11:

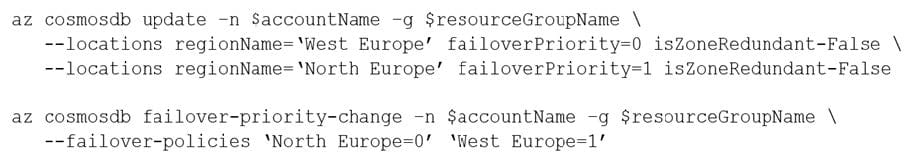

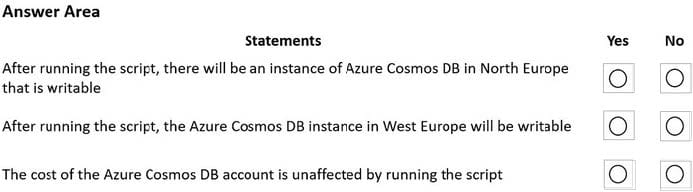

HOTSPOT

You have an Azure Cosmos DB Core (SQL) account that has a single write region in West Europe.

You run the following Azure CLI script.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: Yes

The Automatic failover option allows Azure Cosmos DB to fail over to the region with the highest failover priority with no user action should a region become unavailable.

Box 2: No

West Europe is used for failover. Only North Europe is writable.

To configure multi-region writes, set UseMultipleWriteLocations to true.

Box 3: Yes

Provisioned throughput with a single write region costs $0.008/hour per 100 RU/s, and provisioned throughput with multiple writable regions costs $0.016/hour per 100 RU/s.
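The effect of these rates can be checked with a short calculation. The rates are the ones quoted above and may not match current Azure pricing; the account size (10,000 RU/s in two regions) is hypothetical. Provisioned RU/s are billed in every region associated with the account.

```python
from decimal import Decimal

# Rates quoted above, in USD per 100 RU/s per hour (illustrative only).
SINGLE_WRITE_RATE = Decimal("0.008")
MULTI_WRITE_RATE = Decimal("0.016")


def hourly_throughput_cost(rus: int, regions: int, multi_region_writes: bool) -> Decimal:
    """Provisioned RU/s are billed in every region associated with the account."""
    rate = MULTI_WRITE_RATE if multi_region_writes else SINGLE_WRITE_RATE
    return rate * Decimal(rus) / 100 * regions


# Hypothetical account: 10,000 RU/s replicated to West Europe and North Europe.
single = hourly_throughput_cost(10_000, 2, multi_region_writes=False)
multi = hourly_throughput_cost(10_000, 2, multi_region_writes=True)
print(single, multi)
```

Enabling multiple write regions doubles the hourly throughput cost for the same RU/s and region count.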

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/how-to-multi-master

https://docs.microsoft.com/en-us/azure/cosmos-db/optimize-cost-regions

Question 12:

You have an Azure Cosmos DB Core (SQL) API account named account1 that uses autoscale throughput.

You need to run an Azure function when the normalized request units per second for a container in account1 exceeds a specific value.

Solution: You configure Azure Event Grid to send events to the function by using an Event Grid trigger in the function.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Explanation:

Instead, configure an Azure Monitor alert to trigger the function.

You can set up alerts from the Azure Cosmos DB pane or the Azure Monitor service in the Azure portal.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/create-alerts

Question 13:

HOTSPOT

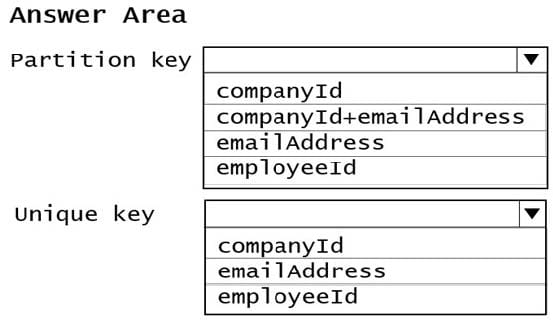

You have a database in an Azure Cosmos DB Core (SQL) API account.

You plan to create a container that will store employee data for 5,000 small businesses. Each business will have up to 25 employees. Each employee item will have an emailAddress value.

You need to ensure that the emailAddress value for each employee within the same company is unique.

To what should you set the partition key and the unique key? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

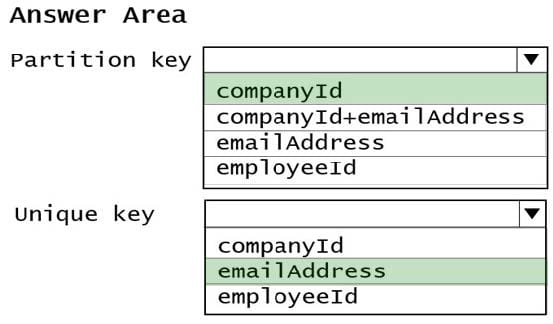

Correct Answer:

Box 1: CompanyID

After you create a container with a unique key policy, creating a new item or updating an existing item in a way that results in a duplicate within a logical partition is prevented, as specified by the unique key constraint. The partition key combined with the unique key guarantees the uniqueness of an item within the scope of the container.

For example, consider an Azure Cosmos DB container with the email address as the unique key constraint and CompanyID as the partition key. When you configure the user's email address as a unique key, each item has a unique email address within a given CompanyID. Two items can't be created with duplicate email addresses and the same partition key value.

Box 2: emailAddress
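The scope of the constraint can be shown with a toy container. This is a simulation, not the Azure Cosmos DB SDK: the class names are hypothetical, and the exception stands in for the HTTP 409 Conflict that the service returns on a unique key violation. Uniqueness is enforced per logical partition, so the effective key is the (CompanyID, emailAddress) pair.

```python
from typing import Dict, Set, Tuple


class UniqueKeyViolation(Exception):
    """Stands in for the HTTP 409 Conflict returned by Azure Cosmos DB."""


class EmployeeContainerSim:
    """Toy container: partition key /CompanyID, unique key /emailAddress (not the SDK)."""

    def __init__(self) -> None:
        self._seen: Set[Tuple[str, str]] = set()

    def create_item(self, item: Dict[str, str]) -> None:
        # The unique key is checked only within the item's logical partition.
        key = (item["CompanyID"], item["emailAddress"])
        if key in self._seen:
            raise UniqueKeyViolation(f"duplicate emailAddress in partition {key[0]}")
        self._seen.add(key)


employees = EmployeeContainerSim()
employees.create_item({"CompanyID": "c-001", "emailAddress": "ana@contoso.com"})
# The same address in a different company (another logical partition) is allowed.
employees.create_item({"CompanyID": "c-002", "emailAddress": "ana@contoso.com"})
```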

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/unique-keys

Question 14:

You are implementing an Azure Data Factory data flow that will use an Azure Cosmos DB (SQL API) sink to write a dataset. The data flow will use 2,000 Apache Spark partitions. You need to ensure that the ingestion from each Spark partition is balanced to optimize throughput.

Which sink setting should you configure?

A. Throughput

B. Write the throughput budget

C. Batch size

D. Collection action

Correct Answer: C

Batch size: An integer that represents how many objects are being written to the Cosmos DB collection in each batch. Usually, starting with the default batch size is sufficient. To further tune this value, note:

Cosmos DB limits a single request's size to 2 MB. The formula is "Request Size = Single Document Size * Batch Size". If you hit an error saying "Request size is too large", reduce the batch size value.

The larger the batch size, the better throughput the service can achieve, while making sure you allocate enough RUs to empower your workload.
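The request size formula above gives an upper bound on the batch size for a given document size. The helper below is a hypothetical sketch; it assumes the 2 MB limit means 2 × 1024 × 1024 bytes and uses an average document size, so real batches with larger documents need more headroom.

```python
MAX_REQUEST_BYTES = 2 * 1024 * 1024  # assumed interpretation of the 2 MB limit


def max_batch_size(avg_document_bytes: int) -> int:
    """Largest batch size for which document size x batch size stays within 2 MB."""
    if avg_document_bytes <= 0:
        raise ValueError("document size must be positive")
    return MAX_REQUEST_BYTES // avg_document_bytes


print(max_batch_size(4096))  # 4 KB documents
```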

Incorrect Answers:

A: Throughput: Set an optional value for the number of RUs you'd like to apply to your Cosmos DB collection for each execution of this data flow. The minimum is 400.

B: Write throughput budget: An integer that represents the RUs you want to allocate for this Data Flow write operation, out of the total throughput allocated to the collection.

D: Collection action: Determines whether to recreate the destination collection prior to writing.

None: No action will be taken on the collection.

Recreate: The collection will get dropped and recreated.

Reference: https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-cosmos-db

Question 15:

You have a database in an Azure Cosmos DB for NoSQL account that is configured for multi-region writes.

You need to use the Azure Cosmos DB SDK to implement the conflict resolution policy for a container. The solution must ensure that any conflict is sent to the conflict feed.

Solution: You set ConflictResolutionMode to Custom, and you use the default settings for the policy.

Does this meet the goal?

A. Yes

B. No

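Correct Answer: A

With ConflictResolutionMode set to Custom and the default settings for the policy (no merge stored procedure registered), Azure Cosmos DB cannot resolve conflicts automatically, so it writes them to the conflict feed, where the application can read and resolve them. Conflicts also go to the conflict feed if a registered merge procedure throws an exception. By contrast, the Last Writer Wins mode resolves conflicts automatically and does not send them to the conflict feed.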
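The documented behavior of the two conflict resolution modes can be modeled in a few lines. This is a toy simulation, not the Azure Cosmos DB SDK: the class and field names are hypothetical, and merge stored procedures are not modeled. It encodes two rules: Last Writer Wins keeps the version with the highest value at the conflict resolution path (_ts by default), and Custom mode with no registered merge procedure records the conflict in the conflict feed.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ConflictResolutionPolicy:
    mode: str  # "LastWriterWins" or "Custom"
    conflict_resolution_path: str = "/_ts"  # LWW default: highest _ts wins
    conflict_resolution_procedure: Optional[str] = None  # Custom default: none


@dataclass
class ContainerConflictSim:
    """Toy model of write-write conflict handling (not the SDK)."""

    policy: ConflictResolutionPolicy
    conflict_feed: List[dict] = field(default_factory=list)

    def resolve(self, versions: List[dict]) -> Optional[dict]:
        if self.policy.mode == "LastWriterWins":
            prop = self.policy.conflict_resolution_path.lstrip("/")
            return max(versions, key=lambda v: v[prop])
        if self.policy.mode == "Custom" and self.policy.conflict_resolution_procedure is None:
            # No merge procedure registered: record the conflict in the conflict
            # feed so the application can resolve it later.
            self.conflict_feed.append({"conflicting_versions": list(versions)})
            return None
        raise NotImplementedError("merge stored procedures are not modeled here")


versions = [{"id": "1", "_ts": 10, "region": "West"}, {"id": "1", "_ts": 12, "region": "East"}]
lww = ContainerConflictSim(ConflictResolutionPolicy("LastWriterWins"))
custom = ContainerConflictSim(ConflictResolutionPolicy("Custom"))
print(lww.resolve(versions), custom.resolve(versions), custom.conflict_feed)
```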

…

Total Questions: 158 Q&A

| Question type | Count |
|---|---|
| Single & multiple choice | 97 Q&As |
| Drag drop | 5 Q&As |
| Hotspot | 56 Q&As |
| Testlet | 1 Q&A |

Download the complete Microsoft DP-420 exam questions and answers: https://www.leads4pass.com/dp-420.html. Choose either the PDF or VCE simulation engine to help you pass the exam successfully.

Summary:

The Microsoft DP-420 certification exam is updated periodically, in phases, to keep pace with technological advancements. We track the official updates as they are released, then collect, edit, and publish them for all candidates to help everyone practice and pass the exam with ease. At each phase, we also share some DP-420 exam questions for free and provide the latest information to our followers, helping you stay one step ahead.