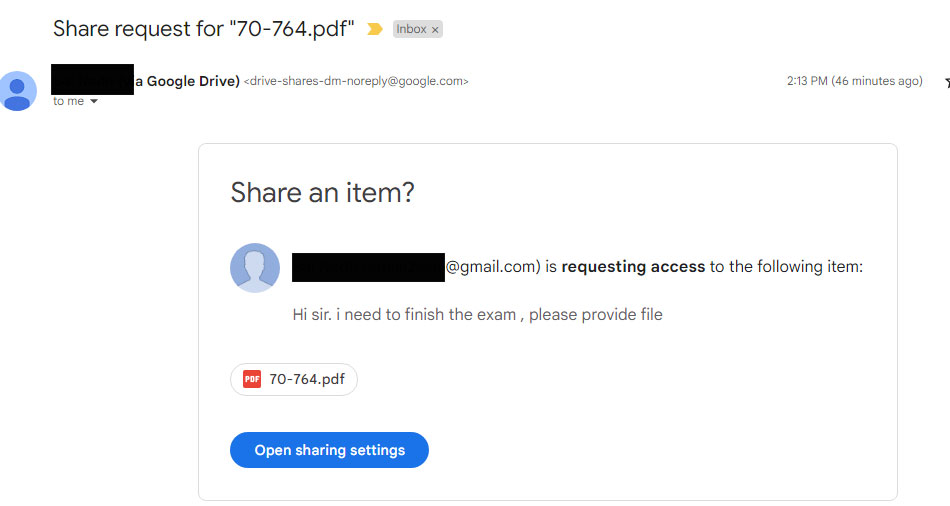

A friend I hadn't been in touch with for years asked me for 70-764 exam dumps. Through our chat, I finally figured out why, and I ended up sharing the DP-300 exam dumps instead.

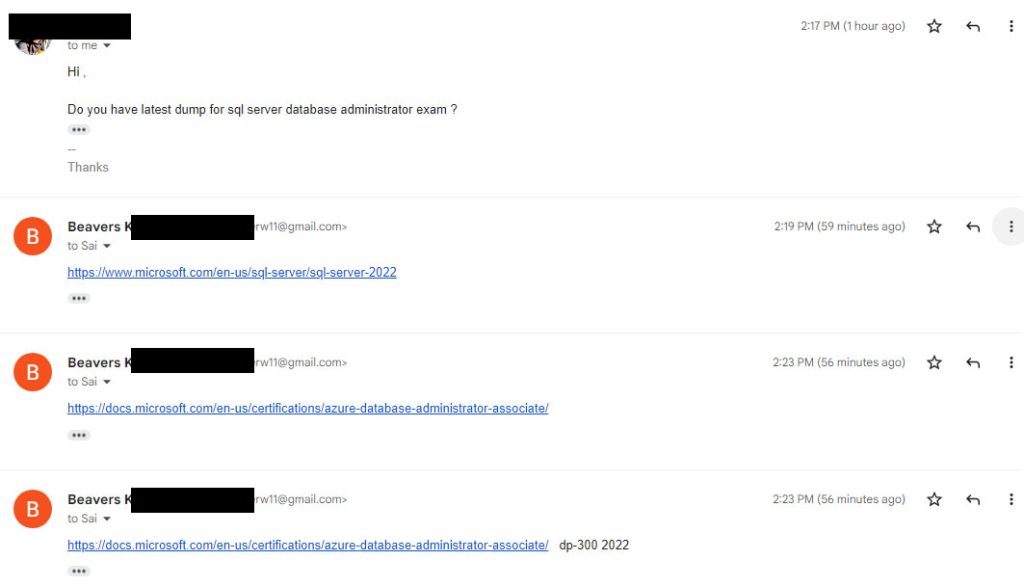

He didn’t know that the SQL database administrator exam had changed a lot.

Today, I will share our chat and some important information with you. I hope it helps anyone in the same situation avoid the same mistake:

First, he emailed me asking for the SQL database administrator 70-764 exam dumps.

My first reaction was shock! However, instead of staying silent, I kindly told him that the 70-764 exam had been retired.

I also shared several useful articles with him:

https://www.microsoft.com/en-us/sql-server/sql-server-2022

https://docs.microsoft.com/en-us/certifications/azure-database-administrator-associate/


After reading the articles, he understood the situation and asked me for the latest SQL database administrator DP-300 exam questions.

Here is the latest DP-300 PDF I shared: https://drive.google.com/file/d/1w9T2CjFphFY4HVGblS5-8eOHKgtLL6y1/

I can only share some of the DP-300 exam questions for free; this is my job, and I hope everyone understands!

If you want the full DP-300 dumps, with 209 exam questions and illustrated answers, they are available at https://www.leads4pass.com/dp-300.html (PDF+VCE).

Here are 13 of the latest SQL database administrator DP-300 exam questions, shared online for everyone:

NEW QUESTION 1:

You have the following Azure Data Factory pipelines:

1. Ingest Data from System1

2. Ingest Data from System2

3. Populate Dimensions

4. Populate Facts

Ingest Data from System1 and Ingest Data from System2 have no dependencies. Populate Dimensions must execute after Ingest Data from System1 and Ingest Data from System2. Populate Facts must execute after the Populate Dimensions pipeline. All the pipelines must execute every eight hours.

What should you do to schedule the pipelines for execution?

A. Add a schedule trigger to all four pipelines.

B. Add an event trigger to all four pipelines.

C. Create a parent pipeline that contains the four pipelines and use an event trigger.

D. Create a parent pipeline that contains the four pipelines and use a schedule trigger.

Correct Answer: D

Reference: https://www.mssqltips.com/sqlservertip/6137/azure-data-factory-control-flow-activities-overview/
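To see why one schedule trigger on a parent pipeline satisfies the ordering rules, the dependencies in the question can be modeled as a small graph. The Python sketch below is not Azure Data Factory code: it assumes the parent pipeline runs its children in a topological order of the stated dependencies (in ADF the two ingest pipelines could also run in parallel), and `execution_order` and `trigger_times` are hypothetical helpers. It relies on the standard-library `graphlib.TopologicalSorter` (Python 3.9+).

```python
from datetime import datetime, timedelta
from graphlib import TopologicalSorter

# Dependencies from the question: each pipeline maps to the pipelines it must wait for.
DEPENDENCIES = {
    "Ingest Data from System1": set(),
    "Ingest Data from System2": set(),
    "Populate Dimensions": {"Ingest Data from System1", "Ingest Data from System2"},
    "Populate Facts": {"Populate Dimensions"},
}


def execution_order(dependencies):
    """Return one valid order in which a parent pipeline could run its children."""
    return list(TopologicalSorter(dependencies).static_order())


def trigger_times(start, count, interval_hours=8):
    """Return the first `count` firing times of a single schedule on the parent."""
    return [start + timedelta(hours=interval_hours * i) for i in range(count)]


if __name__ == "__main__":
    # Every firing of the parent's schedule replays the whole chain in order.
    for fire_time in trigger_times(datetime(2022, 1, 1), 3):
        print(fire_time.isoformat(), "->", " | ".join(execution_order(DEPENDENCIES)))
```

Because only the parent carries the eight-hour schedule, the dependency chain is enforced on every run. Separate schedule triggers on each pipeline (option A) would fire independently and could not guarantee that Populate Dimensions waits for both ingest pipelines.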

NEW QUESTION 2:

You have an Azure Synapse Analytics Apache Spark pool named Pool1.

You plan to load JSON files from an Azure Data Lake Storage Gen2 container into the tables in Pool1. The structure and data types vary by file.

You need to load the files into the tables. The solution must maintain the source data types.

What should you do?

A. Load the data by using PySpark.

B. Load the data by using the OPENROWSET Transact-SQL command in an Azure Synapse Analytics serverless SQL pool.

C. Use a Get Metadata activity in Azure Data Factory.

D. Use a Conditional Split transformation in an Azure Synapse data flow.

Correct Answer: B

Serverless SQL pool can automatically synchronize metadata from Apache Spark. A serverless SQL pool database will be created for each database existing in serverless Apache Spark pools. Serverless SQL pool enables you to query data in your data lake. It offers a T-SQL query surface area that accommodates semi-structured and unstructured data queries.

To support a smooth experience for in-place querying of data that's located in Azure Storage files, serverless SQL pool uses the OPENROWSET function with additional capabilities.

The easiest way to see the content of your JSON file is to provide the file URL to the OPENROWSET function and specify csv FORMAT.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/query-json-files

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/query-data-storage
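The csv trick works by treating each line of a line-delimited JSON file as a single text column and then parsing that text as JSON; the Microsoft documentation linked above does this with OPENROWSET, using 0x0b as the field terminator. The Python sketch below only mimics that idea locally and is not Synapse code: the sample data and the helper names `read_json_lines_as_text` and `parse_documents` are hypothetical.

```python
import csv
import io
import json

# Hypothetical line-delimited JSON content; in the real scenario this would be a
# file in an Azure Data Lake Storage Gen2 container. The structure varies by line.
SAMPLE = (
    '{"id": 1, "name": "alpha", "price": 9.5, "active": true}\n'
    '{"id": 2, "name": "beta", "tags": ["x", "y"]}\n'
)


def read_json_lines_as_text(text: str) -> list[str]:
    """Read each line as one text column, mimicking the OPENROWSET csv approach.

    The vertical tab (0x0b) is unlikely to appear in the data, so using it as the
    field delimiter (with quoting disabled) keeps each JSON document in one column.
    """
    reader = csv.reader(io.StringIO(text), delimiter="\x0b", quoting=csv.QUOTE_NONE)
    return [row[0] for row in reader if row]


def parse_documents(lines: list[str]) -> list[dict]:
    """Parse each JSON document; json.loads keeps numbers, booleans and arrays typed."""
    return [json.loads(line) for line in lines]


if __name__ == "__main__":
    for doc in parse_documents(read_json_lines_as_text(SAMPLE)):
        print({key: type(value).__name__ for key, value in doc.items()})
```

Parsing each document on its own is what lets files with differing structures keep their own numeric, boolean, and array types.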

NEW QUESTION 3:

You plan to move two 100-GB databases to Azure.

You need to dynamically scale resource consumption based on workloads. The solution must minimize downtime during scaling operations.

What should you use?

A. two Azure SQL Databases in an elastic pool

B. two databases hosted in SQL Server on an Azure virtual machine

C. two databases in an Azure SQL Managed Instance

D. two single Azure SQL databases

Correct Answer: A

Azure SQL Database elastic pools are a simple, cost-effective solution for managing and scaling multiple databases that have varying and unpredictable usage demands. The databases in an elastic pool are on a single server and share a set number of resources at a set price.

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/elastic-pool-overview
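The cost argument for elastic pools rests on the fact that databases rarely peak at the same time. The Python sketch below uses made-up hourly vCore figures (an assumption, not data from the question) to compare sizing two single databases for their individual peaks with sizing one pool for the combined peak; it does not model the downtime side of scaling.

```python
# Hypothetical hourly vCore demand for two 100-GB databases whose peaks
# occur at different times of day.
DB1_DEMAND = [1, 1, 4, 4, 1, 1]
DB2_DEMAND = [3, 3, 1, 1, 1, 3]


def single_database_capacity(*workloads):
    """Each single database is sized for its own peak, so the peaks add up."""
    return sum(max(workload) for workload in workloads)


def pooled_capacity(*workloads):
    """A pool only has to cover the peak of the combined hourly demand."""
    return max(sum(hour) for hour in zip(*workloads))


if __name__ == "__main__":
    print("single databases:", single_database_capacity(DB1_DEMAND, DB2_DEMAND))
    print("elastic pool:", pooled_capacity(DB1_DEMAND, DB2_DEMAND))
```

With these sample figures, the two single databases need 7 vCores in total, while the pool needs only 5, because the combined demand never exceeds 5 in any hour.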

NEW QUESTION 4:

You have an Azure virtual machine named VM1 on a virtual network named VNet1. Outbound traffic from VM1 to the internet is blocked.

You have an Azure SQL database named SqlDb1 on a logical server named SqlSrv1.

You need to implement connectivity between VM1 and SqlDb1 to meet the following requirements:

1. Ensure that all traffic to the public endpoint of SqlSrv1 is blocked.

2. Minimize the possibility of VM1 exfiltrating data stored in SqlDb1.

What should you create on VNet1?

A. a VPN gateway

B. a service endpoint

C. a private link

D. an ExpressRoute gateway

Correct Answer: C

Azure Private Link enables you to access Azure PaaS services (for example, Azure Storage and SQL Database) and Azure-hosted customer-owned/partner services over a private endpoint in your virtual network.

Traffic between your virtual network and the service travels over the Microsoft backbone network, so exposing your service to the public internet is no longer necessary.

Reference:

https://docs.microsoft.com/en-us/azure/private-link/private-link-overview
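With a private endpoint in place, the server's host name resolves, from inside VNet1, to a private IP address taken from the VNet's address space, so VM1 never needs the public endpoint. The Python sketch below checks that condition with the standard-library `ipaddress` module. The address space `10.1.0.0/16`, the sample addresses, and the helper `uses_private_endpoint` are hypothetical; in practice the address would come from a DNS lookup on VM1 (for example, `socket.gethostbyname`).

```python
import ipaddress

# Hypothetical address space of VNet1; replace with the real one.
VNET1_ADDRESS_SPACE = ipaddress.ip_network("10.1.0.0/16")


def uses_private_endpoint(resolved_address: str, vnet=VNET1_ADDRESS_SPACE) -> bool:
    """Return True if the server name resolved to a private IP inside the VNet."""
    address = ipaddress.ip_address(resolved_address)
    return address.is_private and address in vnet


if __name__ == "__main__":
    # Hypothetical lookups: a private endpoint address vs. a public address.
    for candidate in ["10.1.2.5", "40.78.1.1"]:
        print(candidate, uses_private_endpoint(candidate))
```

This only confirms that traffic is routed to the private endpoint; the exfiltration protection comes from the endpoint being mapped to SqlSrv1 specifically rather than to the whole service.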

NEW QUESTION 5:

HOTSPOT

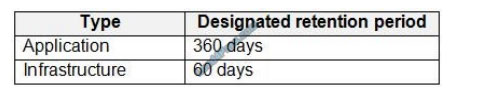

You have an Azure Data Lake Storage Gen2 account named account1 that stores logs as shown in the following table.

…

Please get the remaining free exam questions and answers via the DP-300 PDF: https://drive.google.com/file/d/1w9T2CjFphFY4HVGblS5-8eOHKgtLL6y1/

Those are all the DP-300 exam questions shared online. To help you pass the exam, you can download the full DP-300 dumps (209 Q&A): https://www.leads4pass.com/dp-300.html

Summary:

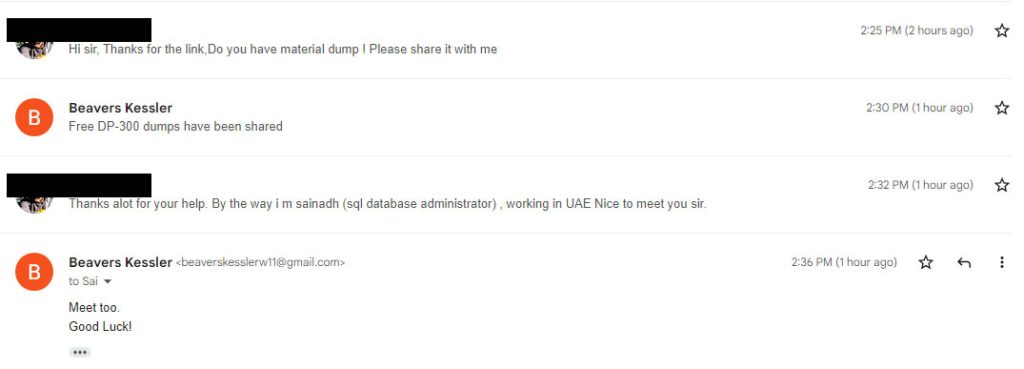

This is my friend's thank-you message to me:

Given my friend's particular location, and because Microsoft certifications used to stay valid for a relatively long time, it's understandable that he hadn't heard the latest news.

However, as of June 30, 2021, all newly earned Microsoft certifications are valid for only one year from the date they are earned. If you earned your certification before this date, it remains valid for a full two years.
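The validity rule above is simple date arithmetic. The Python sketch below encodes it as this article states it; treating a certification earned exactly on June 30, 2021 as falling under the one-year rule is an assumption based on the wording "as of", and `expiry_date` is a hypothetical helper, not a Microsoft tool.

```python
from datetime import date

# Cutoff taken from the article; the cutoff day itself is assumed to use the one-year rule.
CUTOFF = date(2021, 6, 30)


def add_years(day: date, years: int) -> date:
    """Add whole years, moving February 29 to February 28 in non-leap years."""
    try:
        return day.replace(year=day.year + years)
    except ValueError:
        return day.replace(year=day.year + years, day=28)


def expiry_date(earned: date) -> date:
    """Two years for certifications earned before the cutoff, one year otherwise."""
    return add_years(earned, 2 if earned < CUTOFF else 1)


if __name__ == "__main__":
    for earned in [date(2021, 3, 1), date(2022, 5, 10)]:
        print(earned, "->", expiry_date(earned))
```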

So, to everyone reading this article: when you earn the SQL database administrator DP-300 certification, remember to check its validity period. You don't need to worry too much, though, because Microsoft will send you an email before it expires.

Finally, good luck to everyone!